PhD Theses

Büring, Thorsten

Zoomable User Interfaces on Small Screens - Presentation (2007)

Due to continuous and rapid advances in mobile hardware and wireless technology, devices such as smartphones and personal digital assistants (PDAs) are becoming a truly mobile alternative to bulky and heavy notebooks, allowing users to access, search, and explore remote data while on the road. Professionals who benefit from this increased mobility include business consultants, mechanical engineers, and hospital doctors. However, a drawback that impedes this development is that the form factor of mobile devices requires a small screen. Hence, given a large data set, only a fraction of it can be displayed. To identify important data, users are typically forced to navigate the off-screen space linearly via scrolling. For large information spaces, this is tedious, error-prone, and particularly slow. In contrast, the concept of zoomable user interfaces (ZUIs) has been found to improve user performance for a variety of retrieval and exploration scenarios. While ZUIs have been investigated mainly in desktop environments, the objective of this work is to analyze the usability potential of zooming and panning in a mobile context given the constraints of a small screen and pen input. Based on a comprehensive review of related work, the reported research is structured in two parts. First, we focus on the development of mobile starfield displays. Starfield displays are complex retrieval interfaces that encode and compress abstract data in a zoomable scatterplot visualization. To better adapt the interface to the requirements of a small screen, we merged the starfield approach with semantic zooming, providing a consistent and fluent transition from overview to detail information inside the scatterplot. While the participants in an informal study gave positive feedback regarding this type of data access, they also had difficulty orienting themselves in the information space.
We further investigated this issue by implementing a zoomable overview+detail starfield display. While navigating the detail view, the users could thus keep track of their current position and scale via an additional overview window. In a controlled experiment with 24 participants, we compared the usability of this approach with a detail-only starfield and found that the separate overview window did not improve user satisfaction. Moreover, due to the smaller size of the detail view and the time needed for visual switching, it worsened task-completion times. This result led us to implement a rectangular fisheye view for starfield displays. The interface has the advantage that it displays both detail and context in a single view without requiring visual switching between separate windows. Another usability evaluation with 24 participants was conducted to compare this focus+context solution with an improved detail-only ZUI. While task-completion times were similar between the interfaces, the fisheye was strongly preferred by the users. This result may encourage interface designers to employ distortion strategies when displaying abstract information spaces on small screens. Our research also indicates that zoomable starfield displays provide an elegant and effective solution for data retrieval on devices such as smartphones and PDAs. The second part of the research deals with map-based ZUIs, for which we investigated different approaches for improving the interaction design. Maps are currently the most common application domain for ZUIs. Standard techniques for controlling such interfaces on pen-operated devices usually rely on sequential interaction, i.e., the users can either zoom or pan. A more advanced technique is speed-dependent automatic zooming (SDAZ), which combines rate-based panning and zooming into a single operation and thus enables concurrent interaction. Yet another navigation strategy is to allow for concurrent, but separate, zooming and panning.
However, due to the limitations of stylus input, this feature requires the pen-operated device to be enhanced with additional input dimensions. We propose one unimanual approach based on pen pressure, and one bimanual approach in which users pan the view with the pen while manipulating the scale by tilting the device. In total, we developed four interfaces (standard, SDAZ, pressure, and tilting) and compared them in a usability study with 32 participants. The results show that SDAZ performed well for both simple speed tasks and more complex navigation scenarios, but that the coupled interaction led to considerable user frustration. In a preference vote, the participants strongly rejected the interface and stated that they found it difficult and irksome to control. In contrast, the novel pressure and tilt interfaces were much appreciated. However, in solving the test tasks the participants took hardly any advantage of parallel interaction. For a map view of 600x600 pixels, this resulted in task-completion times comparable to those for the standard interface. For a smaller 300x300 pixel view, the standard interface was actually significantly faster than the two novel techniques. This pattern is also reflected in the preference votes. While for the larger 600x600 pixel view the tilt interface was the most popular, the standard interface was rated highest for the 300x300 pixel view. Hence, on a smaller display, precise interaction may have an increased impact on the interface usability.

Butscher, Simon

Reality-based Idioms: Designing Interfaces for Visual Data Analysis that Provide the Means for Familiar Interaction

Due to the increasing amount of data, visual data analysis has become more and more important. Visual data analysis makes it possible to use humans’ mental capabilities to quickly recognize visual patterns, find relevant information, and make sense of it. However, humans’ mental resources are required not only for the actual knowledge generation process but also for the operation of the analysis tools. The interaction with visualizations as data analysis tools is the focus of this dissertation. The fewer resources the user has to spend on operating the tool, the more resources are left to interpret the visual representation of the data and make sense of it. The research approach underlying this dissertation is based on the theory of Reality-based Interaction. Our physical and social environment is full of affordances, rules, and constraints that we are aware of and that frame our thinking and interaction with people and objects. A reality-based interaction technique makes use of this by aligning the operation of a digital system with our physical and social experiences from the real world. The goal is to create user interfaces that seem familiar to the user. In this dissertation, user interfaces for the visual analysis of data based on reality-based visualization and interaction techniques are referred to as Reality-based Idioms. Reality-based Idioms often make use of novel input and output technologies, which, compared to traditional desktop systems, leverage the users’ preexisting and entrenched knowledge about the physical and social world to a much greater extent. The technologies examined in this dissertation include multi-touch displays, deformable displays, tangible user interfaces, and head-mounted augmented reality displays. The applied approach reflects the duality of Human-Computer Interaction as a research and design discipline.
In the sense of a research discipline, the thesis presents the theoretical foundations that explain when a user interface is perceived as reality-based, and thus as familiar, and summarizes them in the Model for Reality-based Idiom Design. In the sense of a design discipline, the practical applicability of the model is illustrated by three domain situations. These focus on navigating visual information spaces, filtering large amounts of data, and analyzing multi-dimensional data. In order to address these domain situations, the dissertation presents five Reality-based Idioms. The evaluation of these design artifacts helps to clarify the benefits of reality-based interaction for visual data analysis. With this approach, the work makes an important contribution to closing the gap between visualization and interaction research and to pointing out new ways to facilitate the operation of tools for visual data analysis.

Dürr, Maximilian

Design and Evaluation of ‘Post-WIMP’ Systems to Promote the Ergonomic Transfer of Patients (2023)

Nurses frequently need to carry out patient transfers during their daily work. Unfortunately, the manual transfer of patients poses a major risk to nurses’ health. The Kinaesthetics care conception can help to address this problem. It provides a basis for conducting patient transfers so that the health development of nurses and patients is supported while injuries are avoided. However, there is little existing support for nurses to learn how the Kinaesthetics care conception can aid them in the manual transfer of patients. This thesis investigates how technical systems that make use of post-‘Windows, Icons, Menus, and Pointers’ (WIMP) user interfaces can support the learning of ergonomic patient transfers based on the Kinaesthetics care conception. The presented research is grounded in theory (including the Kinaesthetics care conception and relevant theoretical work from human-computer interaction) and in an initial qualitative investigation of the context. For the latter part, interviews with nursing-care teachers (N = 5) and nursing-care students (N = 27), and four contextual inquiries were conducted. The qualitative investigation led to a set of six implications for the design of technical systems that aim to support the learning of ergonomic patient transfers based on the Kinaesthetics care conception. Based on the theoretical background and the findings from the qualitative investigation, two systems were designed and implemented in the form of research prototypes: (i) KiTT, a tablet-based system to promote the learning of ergonomic patient transfers in the educational context, and (ii) NurseCare, a mobile system (based on a smartphone and a wearable) that focuses on the support of learning and application in the work context. To determine how well each of the systems addresses nurses’ needs, research questions were established and each system was evaluated in a mixed-methods user study together with nursing-care students.
KiTT was evaluated in a user study with 26 nursing-care students in a nursing-care school. The system can be used by two nursing-care students to learn together. Consequently, the nursing-care students who supported the evaluation participated in 13 dyads. For the evaluation, one part of KiTT, the detection of risky behaviors during the training of a patient transfer, was simulated using the Wizard of Oz method. The results of KiTT’s evaluation indicate that the system provides a good subjective user experience adequate to the nursing-school context, good subjective support for the learning of the Kinaesthetics care conception, and, for a subset of the evaluation participants (n = 18), that KiTT can promote the ergonomically correct conduct of patient transfers. The results also indicate how a system like KiTT could be integrated into and extend existing practices of support. NurseCare was evaluated in an ‘in-the-wild’ user study with nine nursing-care students. Each participant used NurseCare over multiple days on which the participant worked at a clinic. The results of the evaluation indicate that NurseCare provides a good user experience adequate to the nurses’ work domain, and, with the exception of very stressful days, good subjective support for promoting ergonomic work and good but limited subjective support for the application of the Kinaesthetics care conception. Furthermore, they reveal how a system like NurseCare could be integrated into and extend existing practices. NurseCare and KiTT are both based on handheld devices that are already widespread and easily available to nursing-care students. Apart from this, the use of Virtual Reality head-mounted displays was explored with ViTT. ViTT is a Virtual Reality system that aims to promote the learning of ergonomic patient transfers in the educational context. This work in progress has not yet been evaluated with nursing-care students.
As part of this thesis, the created artifacts and conceptual extensions are discussed. Overall, this thesis contributes empirical findings of a qualitative investigation of the targeted context, three research artifacts, and findings from the empirical evaluation of two of these artifacts (KiTT and NurseCare). Apart from individual artifacts and empirical findings, the thesis also contributes an integration and discussion of the empirical findings in the form of implications for (i) the use of post-WIMP systems to promote the learning and application of the Kinaesthetics care conception, and ergonomic work, (ii) the design of such post-WIMP systems with a good User Experience, and (iii) the integration of such systems into existing practices of support in nursing-care education. Additionally, (iv) the applicability of the empirical findings to other scenarios that are not (directly) related to nursing-care education is discussed. Finally, directions for future work are suggested. The artifacts and findings presented in this thesis may inform the future work of researchers and practitioners to develop systems that promote the ergonomic transfer of patients, as well as supportive systems for other scenarios.

Gerken, Jens

Longitudinal Research in Human-Computer Interaction (2011)

The goal of this thesis is to shed more light on an area of empirical research that has drawn only minor interest in the field of Human-Computer Interaction so far: Longitudinal Research. This is surprising insofar as Longitudinal Research, compared to cross-sectional research, provides the exceptional advantage of being able to analyze change processes. To do so, it incorporates time as a dependent variable into the research design by gathering data from multiple points in time. Change processes are not just an additional research area but are essential to our understanding of the world, with HCI being no exception. Only Longitudinal Research allows us to validate our assumptions over time. For example, a user experience study for an electronic consumer product, such as a TV set, that reveals how excited people are about the device should also investigate whether this excitement holds over time, whether usability issues arise after two weeks, and eventually whether people will buy the follow-up product from the same company. Our experience with technology is situated in context, and time is one important aspect of our context; ignoring it does not necessarily lead to invalid research, but often to insignificant research. In this thesis, we contribute to the area of Longitudinal Research in HCI in manifold ways. First, we present a taxonomy for Longitudinal Research, which provides a foundation for the development of the field. It may serve both as a basis for discussion and methodological advances and as a guiding framework for novices who strive to apply Longitudinal Research methods. Second, we provide a practical contribution by presenting PocketBee, a multimodal diary for longitudinal field research. The tool is based on Android smartphones and allows researchers to conduct remote longitudinal studies in a variety of ways.
We embed the discussion of PocketBee in a broader discussion of the diary and experience sampling methods, allowing researchers to understand the context of the tool, its advantages, and also its inherent problems. Finally, we present the Concept Maps method, which tackles a specific issue of Longitudinal Research: the difficulty of analyzing changes in qualitative data over time, as these are normally hidden in large amounts of data and subject to the interpretation of the researcher. In the context of API usability, the method allows the externalization of the mental model that developers generate. Concept Maps are used for these external representations, and by continually updating these maps, changes over time become apparent and the analysis replicable. The thesis will also help researchers to discover further important research areas in this field, such as the variety of methodological issues that arise with gathering data over time. As the topic of Longitudinal Research has not yet been covered comprehensively in the scientific HCI literature, this thesis provides an important first step.

Geyer, Florian

Interactive Spaces for Supporting Embodied Collaborative Design Practices (2013)

Digital technology is increasingly influencing how design is practiced. However, it is not always successful in supporting all design activities. In particular, informal collaborative design methods that are typically practiced early in the design process are still poorly supported by digital tools. Traditional workflows are often altered in negative ways due to a lack of fluency and immediacy or through incompatibility with social dynamics and embodied actions. Many design practices are thus still better supported by relying on traditional physical tools that better facilitate design as embodied, situated practice. At the same time, however, digital technology is unavoidable in today’s work ecology. As a result of this tension, designers frequently have to move between digital and physical tools.

This thesis takes this critical gap as a central motive for investigating how digital tools can be designed to both preserve and augment existing material and social practices of collaborative design activities. By approaching potential solutions to this gap, the state of digital design and creativity support tools is advanced to better suit embodied design practices. Within this thesis, this research question is approached through the design and evaluation of new digital tools within four themes of design practice: 1) externalization, 2) reflection, 3) collaboration and 4) process. Further, as a framework to this research, a structured design methodology is developed that specifically addresses the goal of integrating digital technology with embodied design practices. This tradeoff-driven methodology is then applied to different concrete cases to demonstrate its applicability in context.

Within three case studies, new concepts for supporting collaborative embodied design practices are presented. The first case study discusses the interactive space AffinityTable, which was designed to support the collaborative design method of affinity diagramming. A second case study presents the tool IdeaVis for supporting collaborative sketching sessions. In a third case study, support for the particular activity of documenting and organizing design artifacts is explored with the interactive space ArtifactBubbles. Taken together, the case studies deliver a representation of collaborative embodied design practices. Each case study provides a detailed introduction and justification of the case selection and documents the application of the proposed tradeoff-driven design methodology throughout analysis, design, and evaluation.

As a result, this thesis delivers three types of contributions. As a first contribution, the thesis describes a novel design methodology that can be applied to related design problems. As a second contribution, new design concepts and interaction techniques are introduced that can be reused and adapted by other researchers or practitioners. As a third contribution, empirical data on the effect of the designed methods on properties of design practice is provided. The thesis concludes with abstract design guidelines that bring together all contributions for reuse by researchers and practitioners.

Gundelsweiler, Fredrik

Design-Patterns zur Unterstützung der Gestaltung von interaktiven, skalierbaren Benutzungsschnittstellen [Design Patterns to Support the Design of Interactive, Scalable User Interfaces] (2012)

The design of user interfaces (UIs) is a creative, iterative process supported by many tools. Specifications, guidelines, and style guides as they are currently used in companies do not fulfill the requirements of increasingly rapid and flexible software development. Current software applications show a high degree of complexity in terms of their UI, data connectivity, visualization, search, and interaction techniques. This complexity arises from the combination of these areas and the growing expectations of end users. According to research studies, scalable user interfaces (zoomable user interfaces) in particular are said to improve usability and user experience. The idea of patterns, which was originally developed in architecture by Christopher Alexander, was therefore transferred to the domain of human-computer interaction (HCI). This resulted in different formats and approaches to integrating patterns into the software development process. These approaches are very heterogeneous, and the documentation format is limited to textual descriptions and images. In this work, a pattern documentation format is developed that shows how interactive and multimedia elements can be used to describe patterns. The use of patterns promises to optimize the development process in terms of quality, effectiveness, and efficiency. The patterns are used to support the phases of requirement analysis, conceptual planning, design, and development. Of interest for the design of usable products are positive effects such as increased creativity, the use of established solutions, improved communication, and the emergence of a knowledge base for solving recurring design problems. On the basis of three case studies (image search, social networks, and electronic product data management), the combination of current visualization, filter, and interaction techniques is examined. The case studies resulted in several applications that were developed and evaluated iteratively.
Several novel visualization and interaction patterns are then derived from these applications. The case study on image search turned out to have the most innovative aspects and possibilities for the extraction of novel patterns. The patterns are then presented for documentation, communication, and application in a specially designed interactive multimedia pattern browser. The qualitative evaluation of the patterns with UI designers shows that the patterns are applicable and usable, but that there is still potential for optimization. At the conclusion of the work, the developed pattern format is reflected upon with regard to a common pattern language for HCI. Finally, it must be stated that technical development leads to the emergence of new patterns. These could be documented with the new interactive multimedia format, but are actually leading to a further diversification of HCI pattern languages.

Heilig, Mathias

Exploring Reality-Based User Interfaces for Collaborative Information Seeking (2012)

Information seeking activities such as searching the web or browsing a media library are often considered to be solitary experiences. However, both theoretical and empirical research has demonstrated the importance of collaborative activities during the information seeking process. Students working together to complete assignments, friends looking for joint entertainment opportunities, family members planning a vacation, or colleagues conducting research for collaborative projects are only a few examples of cooperative search. However, these activities are not sufficiently supported by today’s information systems, as they focus on individual users working with PCs. Reality-based User Interfaces, with their increased emphasis on social, tangible, and surface computing, have the potential to enhance this aspect of information seeking. By blending characteristics of real-world interaction and social qualities with the advantages of virtual computer systems, these interfaces increase possibilities for collaboration. To date, this phenomenon has not been sufficiently explored. This thesis is an analytical investigation of the improvements and changes reality-based user interfaces can bring about in collaborative information seeking activities. To explore these interfaces, a realistic scenario had to be devised in which they could be embedded. The Blended Library has therefore been developed as the context for this thesis. In this vision, novel concepts have been developed to support information seeking and collaborative processes inside the physical libraries of the future; the Blended Library thus represents an appropriate ecosystem for this thesis. Three design cases have been developed that provide insight into how collaboration in information seeking may be influenced by reality-based user interfaces.
Subsequently, two experimental user studies were carried out to attain more profound insight into the behavior of people working collaboratively, based on the type of interface in use.

Jenabi, Mahsa

PrIME: Primitive Interaction Tasks for Multi-Display Environments (2011)

Multiple displays are commonly used in meetings and discussion rooms. These settings provide new challenges for designing fluid interaction across displays. For instance, a list of primitive interaction tasks in such environments has not been investigated in the literature. In addition, past researchers have carried out their research using mobile phones as input devices to control displays. However, it is still unclear whether an input device with an integrated display can improve the performance of multi-display interaction tasks. This research contributes to the design space of multi-display environments (MDEs) in two respects. Its first contribution is theoretical, as it lists the primitive interaction tasks for MDEs. The identified primitive tasks are: object selection, object transfer, and focusing-brushing-linking in collaborative MDEs, as well as visualization gallery as an extension to the list of primitive tasks for single-display interaction. This list helps interaction designers who design new input devices to be aware of the tasks that the device should support. Furthermore, it can be used as a set of criteria to evaluate novel input devices. The second contribution of this research is practical and is aimed at answering the following research questions, which state-of-the-art techniques have left unanswered. Does a mobile input device with an integrated display improve the performance of cross-display interaction tasks? The idea behind this research was to compare two devices, one of which has an integrated display whereas the other does not. To answer the research question, a working prototype, called the PrIME prototype, was implemented. This was done using a laser-pointing device and an iPhone as two alternative input devices for MDEs. A user study was conducted to compare these devices according to their performance, as well as the users’ subjective feedback.
The outcome of the experiment indicated that the iPhone is better suited to selecting overlapping objects, objects that are small, and objects that are at a distance. Although it was hypothesized that the laser-pointing device might be quicker than the iPhone at selecting larger objects, the paired-samples t-test did not show any significant difference between the two. Transferring one object from one display to another was significantly quicker using the iPhone. Surprisingly, the iPhone was not quicker at transferring several objects from one display to two other displays. In fact, the result of the t-test showed no significant difference. It was expected that the iPhone would be quicker in performing more complex tasks, namely where several tasks are done one after another. This was assumed given that the iPhone has a clipboard which can save the selected objects and which the user can carry around. The users’ subjective feedback showed that the iPhone was considered significantly better than the laser-pointing device. This is because it was regarded as easier to use, more accurate for object selection, and less tiring to carry, and it also responded more quickly to the users’ input. Using an iPhone as an input device to control a large display, which GUI and which ordering algorithm would be the most preferable according to the users’ performance and subjective feedback? Three different visualizations were implemented, namely CoverFlow, ZoomGrid, and DisplayMap. For the last two visualizations, three different algorithms were used to put objects in order. Therefore, each user tested seven different conditions. A user study was conducted to compare these seven conditions. As the result of the experiment indicated, the CoverFlow visualization is significantly slower than the other two GUIs and is therefore not appropriate for this sort of task. DisplayMap and ZoomGrid were similarly fast; no significant difference was found between them.
According to the subjective feedback by the users, the ZoomGrid GUI was preferable because it showed a good overview of the existing objects. What application domain can benefit from the PrIME prototype? To show the application of the PrIME prototype concept in a real-life scenario, the CrossStorm prototype was implemented. CrossStorm supports users in brainstorming sessions. Users can create, delete, and move post-its across displays using an iPhone as an input device. This prototype allowed two users to use two iPhones simultaneously, which gave users the possibility of using more than one iPhone to share their ideas with other members of their group. Lessons learned from the design and implementation of these prototypes showed the impacts of using a mobile input device with an integrated display for cross-display interaction.

Jetter, Hans-Christian

Design and Implementation of Post-WIMP Interactive Spaces with the ZOIL Paradigm (2013)

Post-WIMP interactive spaces are physical environments or rooms for collaborative work that are augmented with ubiquitous computing technology. Their purpose is to enable a computer-supported collaboration between multiple co-located users that is based on a seamless use of multiple devices and natural post-WIMP interaction.

This thesis answers the research question how designers and developers of such ubiquitous computing environments can be supported to create more usable multi-user and multi-device post-WIMP interactive spaces for co-located collaborative knowledge work.

For this purpose, it first defines concepts such as post-WIMP (post-“Windows, Icons, Menu, Pointer”) interaction, interactive spaces, and knowledge work. Then it formulates the novel ZOIL (Zoomable Object-Oriented Information Landscape) technological paradigm. The ZOIL paradigm is the main contribution of this thesis and consists of three components:

- The six ZOIL design principles that define ZOIL’s interaction style and provide “golden rules” to support interaction designers.

- The ZOIL software framework that supports developers during the implementation of post-WIMP interactive spaces for collaborative knowledge work and enables the realization of ZOIL’s design principles in practice.

- The four example prototypes based on ZOIL that can serve as exemplars for designers and developers alike.

Each of the six ZOIL design principles is derived from literature of disciplines related to Human-Computer Interaction including Ubiquitous Computing, Information Visualization, Computer-supported Cooperative Work, Cognitive Science, Personal Information Management, and Software Engineering.

Their formulation is empirically validated and extended by the author's own experience of applying them in the four ZOIL example prototypes for different application domains (e.g., e-Science, collaborative search, creative design) and by the findings from the author's own user studies.

Furthermore, this thesis introduces the new open-source ZOIL software framework for implementing post-WIMP interactive spaces that follow the ZOIL design principles. This software framework is described in its architecture and software design patterns and is evaluated in terms of its usability and practical value for developers in an API usability evaluation study.

Klein, Peter

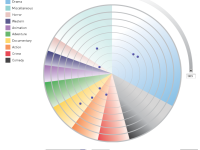

The CircleSegmentView: A User Centered, Meta-data Driven Approach for Visual Query and Filtering (2005)

This work is embedded in an EC-funded project named INVISIP. This project covers a variety of goals in the field of geo-meta-data. The work packages for the human-computer interaction group of the University of Konstanz dealt with the development of a visual meta-data browser, the user-centered design process, and the exploration of meta-data ranking schemes. My research reflects these separate work packages. Although the main part of my research falls within the field of information visualization, it also includes large parts of research in the fields of usability engineering and information retrieval. The goal of my research was (and still is) to offer the user of visual information-seeking systems a maximized set of relevant data in the most efficient and effective way. Both direct search and exploration should be supported in a satisfying and enjoyable way. Therefore a user-centered design process is essential. Beyond this, as much as possible must be known about the data (so-called meta-data) and be offered to the user, so that the user's decision-making is based on the best possible knowledge. As a résumé of my work, there is the prototype of a visualization that is capable of offering dynamic query features as well as visual filter facilities. Additionally, I present a model for the efficient implementation of visual information-seeking systems based upon meta-data and a user-centered design process. This research is supplemented by a ranking scheme for meta-data, which takes the user's knowledge as well as the user's search behavior into account. This will lead to more effective and more efficient information-seeking systems.

König, Werner A.

Design and evaluation of novel input devices and interaction techniques for large, high-resolution displays (2010)

Large, high-resolution displays (LHRD) provide the advantageous capability of being able to visualize a large amount of very detailed information, but also introduce new challenges for human-computer interaction. Limited human visual acuity and field of view force users to physically move around in front of these displays either to perceive object details or to obtain an overview. Conventional input devices such as mouse and keyboard, however, restrict users' mobility by requiring a stable surface on which to operate and thus impede fluid interaction and collaborative work settings. In order to support the investigation of alternative input devices or the design of new ones, we present a design space classification which enables the methodical exploration and evaluation of input devices in general. Based on this theoretical groundwork we introduce the Laser Pointer Interaction, which is especially designed to satisfy the demands of users interacting with LHRDs with respect to mobility, accuracy, interaction speed, and scalability. In contrast to the indirect input mode of the mouse, our interactive laser pointer supports a more natural pointing behaviour based on absolute pointing. We describe the iteratively developed design variants of the hardware input device as well as the software toolkit which enables distributed camera-based tracking of the reflection caused by the infrared laser pointer. In order to assess the general feasibility of the laser pointer interaction for LHRDs, an experiment on the basis of the ISO standard 9241-9 was conducted comparing the interactive laser pointer with the current standard input device, the mouse. The results revealed that the laser pointer's performance in terms of selection speed and precision was close to that of the mouse (around 89% at a distance of 3 m), although the laser pointer was handled freely in mid-air without a stabilizing rest.
Since natural hand tremor and human motor precision limit further improvement of pointing performance, in particular when interacting from distant positions, we investigate precision-enhancing interaction techniques. We introduce Adaptive Pointing, a novel interaction technique which improves pointing performance for absolute input devices by implicitly adapting the Control-Display gain to the current user’s needs without violating users’ mental model of absolute-device operation. In order to evaluate the effect of the Adaptive Pointing technique on interaction performance, we conducted a controlled experiment with 24 participants comparing Adaptive Pointing with pure absolute pointing using the interactive laser pointer. The results showed that Adaptive Pointing yields a significant improvement compared with absolute pointing in terms of movement time (19%), error rate (63%), and user satisfaction. As we experienced in our own research, the development of new input devices and interaction techniques is very challenging, since it is poorly supported by common development environments and requires in-depth and broad knowledge of diverse fields such as programming, signal processing, network protocols, hardware prototyping, and electronics. We introduce the Squidy Interaction Library, which eases the design and evaluation of new interaction modalities by unifying relevant frameworks and toolkits in a common library. Squidy provides a central design environment based on high-level visual data flow programming combined with zoomable user interface concepts. The user interface offers a simple visual language and a collection of ready-to-use devices, filters, and interaction techniques which facilitate rapid prototyping and fast iterations. The concept of semantic zooming nevertheless enables access to more advanced functionality on demand. Thus, users are able to adjust the complexity of the user interface to their current needs and knowledge.
The Squidy Interaction Library has been iteratively improved alongside the research of this thesis and through its use in various scientific, artistic, and commercial projects. It is free software and is published under the GNU Lesser General Public License.

Mann, Thomas

Visualization of Search Results from the World Wide Web (2001)

This thesis explores special forms of presentations of search results from the World Wide Web. The usage of Information Visualization methodologies is discussed as an alternative to the usual arrangement in the form of a static HTML-list. The thesis is structured into four main parts. The first part deals with information seeking. It presents ideas from the literature on how to structure the information seeking process and some results from studies of how people search the Web. For the second part, visualization ideas, metaphors, techniques, components and systems have been collected. The overview focuses on the visualization of queries or query attributes, document attributes, and interdocument similarities. The reference model for visualization from [Card, Mackinlay, Shneiderman 1999] is used to discuss differences between certain techniques. Visualization components from a number of areas, usage scenarios, and authors are presented using a consistent search example wherever possible. The part about Information Visualization also includes a discussion of multiple coordinated views and some results from empirical evaluations of visualizations by other authors. The third, empirical part of the thesis presents the results of an evaluation of five different user interface conditions of a local meta search engine called INSYDER. An overview covering the INSYDER project in general, the system architecture, and the development of the implemented visualization ideas is included. In a test with 40 users, effectiveness, efficiency, expected value, and user satisfaction were measured for twelve tasks. The evaluated user interface conditions were HTML-List, ResultTable, ScatterPlot plus ResultTable, BarGraph plus ResultTable, and SegmentView plus ResultTable. The SegmentView included TileBars and StackedColumns variants. The traditional presentation in the form of an HTML-List performed best in terms of effectiveness and efficiency.
In contrast to this, the users preferred the ResultTable and the SegmentView. The last section of the thesis consists of a summary and an outlook.

Memmel, Thomas

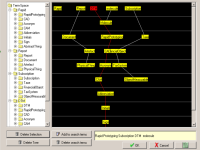

User Interface Specification for Interactive Software Systems: Process-, Method- and Tool-Support for Interdisciplinary and Collaborative Requirements Modelling and Prototyping-Driven User Interface Specification (2009)

Specifying user interfaces is a fundamental activity in the UI development life cycle as the specification influences the subsequent steps. When the UI is to be specified, a picture is worth a thousand words, and the worst thing to do is write a natural-language specification for it. In corporate specification processes, Office-like applications dominate the work style of actors. Moving from text-based requirements and problem-space concepts to a final UI design and then back again is therefore a challenging task. Particularly for UI specification, actors must frequently switch between high-level descriptions and low-level detailed screens. Good-quality specifications are an essential first step in the development of corporate software systems that satisfy the users' needs. But the corporate UI development life cycle typically involves multiple actors, all of whom make their own individual inputs of UI artefacts expressed in their own formats, thus posing new constraints for integrating these inputs into comprehensive and consistent specifications for a future UI. This thesis introduces a UI specification technique in which these actors can introduce their artefacts by sketching them in their respective input formats so as to integrate them into one or more output formats. Each artefact can be introduced in a particular level of fidelity ranging from low to high and switched to an adjacent level of fidelity after appropriate refining. The resulting advanced format is called an interactive UI specification and is made up of interconnected artefacts that have distinct levels of abstraction. The interactive UI specification is forwarded to the supplier, who can utilize its expressiveness to code the final UI in precise accordance with the requirements. The concept of interactive UI specification integrates interdisciplinary and informal modelling languages with different levels of fidelity in UI prototyping.
In order to determine the required ingredients of interactive UI specifications in detail, the different disciplines that contribute to corporate UI specification processes are analyzed in similar detail. For each stage in the UI specification process, a set of artefacts is specified. All stakeholders understand this set, and it can be used as a common vehicle. Consequently, a network of shared artefacts is assembled into a common interdisciplinary denominator for software developers, interaction designers and business-process modellers in order to support UI modelling and specification-level UI design. All means of expression are selected by taking into consideration the need for linking and associating different requirements and UI designs. Together, they make up the interactive specification. The UI specification method presented in this thesis is complemented by an innovative experimental tool known as INSPECTOR. The concept behind INSPECTOR is based on the work style of stakeholders participating in corporate UI specification processes. The tool utilizes a zoom-based design room and white-board metaphor to support the development and safekeeping of artefacts in a shared model repository. With regard to the transparency and traceability of the rationale of the UI specification process, transitions and dependencies can be externalized and traversed much better by using INSPECTOR. Compared to Office applications such as Microsoft Word or PowerPoint, INSPECTOR provides different perspectives on UI specification artefacts, allows the actors to keep track of requirements and supports a smooth progression from the problem-space to the solution-space. In this way, co-evolutionary design of the UI is introduced, defined, and supported by a collaborative UI specification tool allowing multiple inputs and multiple outputs.
Finally, the advantages of the approach are illustrated through a case study and by a report on three different empirical studies that reveal how the experts who were interviewed appreciate the approach. The thesis ends with a summary and an outlook on future work directed towards better tool support for multi-stakeholder UI specification.

Müller, Frank

Granularity Based Multiple Coordinated Views to Improve the Information Seeking Process (2006)

This thesis introduces a new concept for visualizing search results from database inquiries. Techniques that are already known and proven are combined in a way that emphasizes the advantages and eclipses the drawbacks of individual features. For this purpose Multiple Coordinated Views and a Granularity Concept based on the idea of a semantic zoom were unified. The approach is not restricted to a specific domain and the visualizations used can be easily adapted. The concept is implemented within the VisMeB framework, a Java-based Visual Metadata Browser that is available in diverse versions. Two main disciplines guide this thesis: Information Visualization and Usability Engineering. Thus, the presented work adheres to this division. The first part of the thesis deals with Information Visualization in general and gives an overview of the interaction techniques used and applications that provided inspiration. This progresses to a detailed description of the multiple coordinated views implemented and the granularity concept. Because the use of multiple coordinated views offers advantages as well as drawbacks, it is necessary to clarify a) whether to use them at all, b) if yes, which visualizations to choose, and c) how the layout and the interaction are defined, which leads to the three-phase model introduced and described in this work for the first time. Furthermore, three differently implemented granularity versions are introduced (the TableZoom, the RowZoom, and the CellZoom), which have a strong influence on the display and user interaction. A detailed description of interaction techniques between the multiple coordinated views subject to the granularity concept closes the first part of the thesis. The second part deals with Usability Engineering or, more precisely, the User-Centered Design Process. The development of VisMeB follows the user-centered design process, which results in early user tests that are responsible for important design decisions.
This leads to an enormous advantage compared to systems that did not involve users during development. After an introduction to the field of usability engineering, the different development steps of VisMeB are considered. Prototyping played an important role and, in combination with user tests, the design process was guided by the results of these investigations. An outlook and a conclusion bring this thesis to a close.

Müller, Jens

Collaborative Augmented Reality: Designing for Co-located and Distributed Spatial Activities (2018)

Augmented Reality (AR) can create the illusion of virtual objects being integrated into the viewer’s physical environment. Researchers have identified numerous application areas that can benefit from AR. Moreover, they have suggested AR displays as tools to support co-located and distributed collaboration. Usually, collaboration requires the collaborators to coordinate their joint actions through conversation. Today, an increasing number of AR-capable smartphones and tablets contributes to the dissemination of AR technology. Yet, research has not widely investigated the question of how ARs can be designed to support conversation and collaboration.

With the goal of informing interaction designers, this thesis studies how the design of ARs can facilitate collaborative, spatial activities. It builds on the assumption that spatial activities require the collaborators to exchange spatial information through conversation, e.g., to guide each other’s attention to a task object in the AR environment. To specify the location of a specific task object, speakers often utilize visually outstanding objects, so-called landmarks, as reference objects. Collaborative ARs, however, do not necessarily provide as many landmarks as some spatial activities would require. Moreover, distributed ARs may only offer physical landmarks that exist exclusively in one collaborator’s environment. Such landmarks are, therefore, useless for spatial conversations with a remote AR-collaborator. This work makes two propositions to overcome this issue of missing referencing options during co-located and distributed, collaborative spatial activities. The first proposition consists in adding shared, virtual landmarks to the collaborators’ AR. The second proposition consists in embedding both the task objects and the virtual landmarks into a shared, virtual environment.

Within three controlled lab studies, this thesis evaluates the two propositions and makes three contributions. First, it provides a better understanding of how the propositions shape synchronous, co-located, and distributed spatial referencing. Second, it provides a set of design guidelines on how to support collaborative activities in AR that involve spatial referencing. Third, it informs future research to support AR-based, collaborative spatial activities.

Mußler, Gabriela

Retrieving Business Information from the WWW (2002)

Within this work the retrieval aspects of a system to retrieve Business Information from the WWW are presented. The WWW is seen as an important source for Business Information. In this thesis a number of problems are addressed and solutions to them are proposed. First, the results of a study conducted within this work among business decision makers, with the objective of gaining insight into their external Business Information needs, are discussed. The results show that external information is very valuable for them and that Business Information from the WWW is seen to be an important source. The outcomes of this study and the review of literature led to the development of a visual information seeking system for Business Information called INSYDER. The Information Retrieval aspects of this system are the focus of this work, whereas the visualisation aspects are discussed in [Mann2002]. The intention was to develop a system that gives substantial added value to the user. For this purpose, various components have been designed. The visualisation of the query for an interactive query expansion assists the user in the first step of the information seeking process. The proposed ranking and classification components support the user when reviewing results. Here the ranking analyses the results document by document on the fly. In this way a comparable ranking, not relying on an overall document collection, has been achieved. For a redefinition of the initial query a relevance feedback option has also been included. The evaluation of the retrieval performance using TREC data shows the system's effectiveness. As a résumé and outlook on future work, the presented components and enhancements are rearranged in a sketch of a new Business Intelligence System.

Rädle, Roman

Designing UbiComp Experiences for Spatial Navigation and Cross-Device Interactions (2017)

We are witnessing considerable growth in the number and density of powerful mobile devices around us. Devices such as smartphones and tablets are our everyday companions. If not already at hand, they often wait in our bags and pockets to provide us with a ubiquitous computing (UbiComp) experience. However, most of these devices are still blind to the presence of other devices, and performing tasks among them is usually tedious due to the lack of guiding principles. This thesis closes this gap by investigating the design and evaluation of spatial and cross-device interactions. As a central theme, the presented research is fundamentally grounded in embodied practices, exploiting users’ pre-existing practical knowledge of everyday life for human-computer interaction. These embodied practices are often applied subconsciously in our daily activities, which opens up new, as yet unexplored, potential for fun and joyful UbiComp experiences.

Within this thesis, research is approached through both deductive and inductive reasoning. It begins with a brief history of UbiComp and its overarching vision. Contradicting opinions on this vision are discussed before leading over to recent theories and beliefs on embodied cognition and models of human spatial memory. This theoretical background eventually drives arguments for as yet unexplored and hidden potential for spatial and cross-device interactions. Then, the application domain is narrowed down to academic libraries and knowledge work activities. In field studies at the Library of the University of Konstanz, the following two main knowledge work activities and their associated issues are identified: literature and bibliographic search, and reading and writing across documents. Together with the theoretical background, the issues found are transformed into potential for future knowledge work. To this end, two fully functional research prototypes, Blended Shelf and Integrative Workplace, were implemented to explore the problem space further and to derive the research questions covered in this thesis.

The research questions are tackled through controlled experiments and the implementation of low-cost enabling technology. In the first experiment, the optimal size of a spatially-aware peephole display is studied. As a finding, a relatively small tablet-sized peephole display serves as a "sweet spot" between navigation performance, subjective workload, and user preference. Within the second experiment, peephole navigation is contrasted with traditional multi-touch navigation. The findings indicate that users prefer physical peephole navigation over multi-touch navigation. It also leads to better navigation trajectories, shortens task completion time, and hints at longer retention of object identities as well as their locations in human spatial memory. Due to the lack of appropriate technology, HuddleLamp was developed in an intermediary step. HuddleLamp is a low-cost sensing technology that tracks multiple mobile devices on a table. It allows implementing spatial and cross-device applications without the need to instrument rooms, equip devices with markers or install additional software on them. This technology is used in the third experiment to understand subtleties of cross-device interactions. Findings show that, for cross-device object-movement tasks, users prefer spatially-aware interactions over spatially-agnostic interactions.

Apart from individual findings, this thesis contributes a summary and integration of all findings into general design guidelines for future spatial and cross-device applications. Ultimately, these guidelines can be applied by researchers and practitioners to develop UbiComp experiences that increase users’ task performance and lower their individual workloads, such as mental demand, effort, and frustration. At the same time, these guidelines lead to an increase in the cumulative value of working with multiple mobile devices.

Schwarz, Tobias

Holistic Workspace – Gestaltung von realitätsbasierten Interaktions- und Visualisierungskonzepten im Kontext von Leitwarten (2015)

The technical evolution of information and communication technologies has had a growing impact over the past few years, not least on process management. The increase in automation and virtualization has resulted in a profound structural change in the work environment of control room operators. This development is accompanied by a wider range of functions that place high demands on people's cognitive skills. While operators in earlier, analog process management used to be directly involved in the technical process, today they are at a much greater cognitive distance from the technical process. This makes it difficult for operators to build an adequate mental model, which in turn impacts negatively on the transparency of the processes they have to supervise. The form of interaction predominant in today's control rooms is the conventional desktop system, which further amplifies this effect. Interaction using mouse and keyboard, as well as process visualization based on numerous screens, restricts the operator's sensory and physical perception of the process status. Operators are not adequately supported in terms of their innate abilities, such as body awareness.

This thesis deals with the issue of designing a holistic work environment for control room operators, taking due account of new approaches to human-computer interaction. In light of the growing complexity of the human-machine interface, the study first looked at the context of use by conducting a requirements analysis across a variety of control room domains. The aim of the research, which was carried out by means of participative observation and partially structured interviews, was to identify domain-overarching activities and discuss new interaction technologies with the experts. The findings constituted the basis for new interaction and visualization concepts. The model behind the concept development embraces the principles of reality-based interaction, which emphasize both the operators with their skills and prior knowledge and the situation-specific context of the work environment. Based on the interaction paradigm of Blended Interaction, which is guided by reality-based interaction, the workplace design process gives equal consideration to personal and social interaction, workflows, and also the physical environment. Learned and evolution-related characteristics of operators are used to make the interaction more tangible and therefore more understandable. The application possibilities of Blended Interaction are illustrated by design cases for monitoring and diagnostic operations as well as for the manipulation of process variables and their documentation. The concepts of a possible paradigm shift toward new forms of interaction are examined in empirical studies and discussed.

This thesis contributes to research by presenting an innovative methodology for designing the human-machine interface in control rooms. Its practical applications are based on empirical data. Blended Interaction is used as the basic model for creating holistic concepts because it provides new ways of designing the work environment of control room operators by starting from digital and real-world perspectives. The design approach is illustrated by examples of interaction and visualization concepts for different domains. The thesis explains how this strategy can be successfully applied, and concludes that promising results can be expected from a study of further control room operations.

Wang, Yunlong

Designing Digital Health Interventions for Sedentary Behavior Change (2019)

Sedentary lifestyles are becoming increasingly ubiquitous along with the development of industry and technology, due to the reduced necessity of physical activity during work. Unfortunately, evidence has shown that prolonged sedentary behavior is a risk factor for many chronic diseases (e.g., obesity, type 2 diabetes, cardiovascular diseases, and colon cancers), independent of moderate- and vigorous-intensity physical activity. Therefore, it is urgent to improve the sedentary lifestyle to prevent these diseases.

Compared with traditional intervention programs, digital health interventions have several advantages: (1) they are easier to scale up, (2) they could be more lightweight and cheaper, and (3) they could enable real-time and context-aware interventions. Therefore, in this dissertation, we focus on the technologies of digital health interventions for sedentary behavior change. We developed and evaluated new technologies based on daily-use digital devices (i.e., smartphones and PCs) for sedentary behavior change, and explored the potential impact of emerging digital devices (i.e., augmented-reality head-mounted displays) on users’ movement behavior.

Due to the interdisciplinary nature of this research field, digital health (eHealth), this dissertation involves theories and practice from health psychology, physiology, public health, human-computer interaction, and data mining. First, we conducted a systematic review of technologies for sedentary behavior change at work. Through this review, we noticed several limitations in prior work, including the inconsistent use of taxonomies and the lack of application of behavioral theories. Therefore, we then proposed a holistic framework, TUDER (Targeting, Understanding, Designing, Evaluating, and Refining), for designing and reporting digital health interventions by integrating existing taxonomies and theories from different communities.

Following our proposed framework, we conducted two intervention studies for reducing the sedentary behavior of office workers and college students. In the first study, we developed a mobile app – SedVis – visualizing users’ mobility and sedentary patterns extracted by our proposed data mining algorithm to support action planning of sedentary behavior change. The results showed that using novel visualizations of mobility patterns might be a promising avenue for reducing sedentary time by facilitating self-monitoring of the behavior and providing engaging feedback.

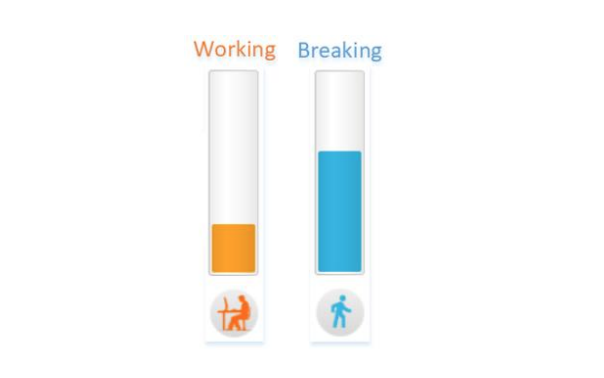

Our second intervention study aimed to evaluate two context-aware PC reminders, the point-of-choice prompt and our proposed SedBar (an always-on progress bar), regarding the effects on users’ sedentary behavior change and perceived usefulness and interruption. This study suggested that context-aware PC reminders hold great potential for sedentary behavior change. The point-of-choice prompt might be a promising tool for reducing sedentary time for screen-based workers. Users perceived the SedBar as useful, but the effectiveness should be further investigated.

Besides lightweight and practical software applications on smartphones and PCs, we also explored the potential impact of augmented-reality head-mounted displays (e.g., HoloLens) on office workers’ movement behavior. By comparing the participants’ movement behavior under different levels of movement freedom and flexibility when performing tasks using HoloLens, we provided several design implications for future applications on AR-HMDs from the health perspective.

Lastly, in the dissertation, we stepped out of the scope of sedentary behavior change and reviewed the research on general health behavior change in the HCI community. Following our proposed holistic framework of digital health interventions, we discussed the patterns and trends in the field of health behavior change research in HCI. Based on the reviewed studies, we provided implications and research opportunities for future work.
