Former Media Room

Multimodal Interaction & User Interface Design

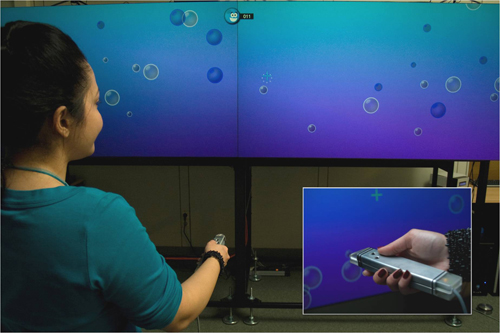

The Media Room provides a research environment for the design, development, and evaluation of novel interaction techniques and input devices, as well as a simulation facility for future collaborative work environments. To this end, it offers a variety of input devices (e.g. multi-touch, laser pointer, hand gestures, body tracking, eye gaze, speech) and output devices (tabletop, HD-Wall and vertically aligned displays, 4K display, audio and tactile feedback), which can be used simultaneously and in combination to enable new forms of multimodal interaction.

The following paragraphs outline some of these input and output devices.

Tangible user interfaces allow users to interact directly with the interface by placing physical objects (tokens) on the surface or by using their hands.

In addition, multi-touch technology makes this experience even more natural and allows several people to use the surface simultaneously. This makes such a combination of input and output devices very useful for collaborative work as well as for leisure activities, e.g. searching for a hotel that best meets one's personal requirements.

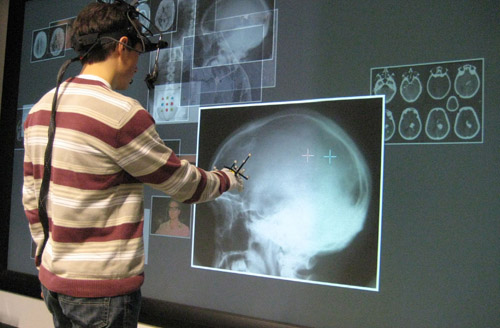

Laser pointer interaction offers high flexibility (the device is mobile) and natural interaction (absolute pointing), but hand tremor causes considerable jitter.

We develop filtering techniques that reduce this jitter without users noticing and that result in significantly lower error rates when hitting small targets.
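To illustrate what such a jitter filter can look like, here is a minimal Python sketch of the 1€ filter (Casiez, Roussel and Vogel), a published adaptive low-pass filter for noisy pointing input. It is a swapped-in example, not necessarily the technique developed for the Media Room, and the parameter defaults (`min_cutoff`, `beta`, `d_cutoff`) are illustrative assumptions that would need tuning for a real laser pointer. The idea is that slow movements, where jitter is most visible, are smoothed strongly, while fast movements raise the cutoff frequency so the cursor does not lag noticeably.

```python
import math
from typing import Optional


def smoothing_factor(cutoff_hz: float, dt: float) -> float:
    """Exponential smoothing factor of a first-order low-pass filter."""
    r = 2.0 * math.pi * cutoff_hz * dt
    return r / (r + 1.0)


class OneEuroFilter:
    """Adaptive low-pass filter for one coordinate of a pointer signal.

    Parameter defaults are illustrative assumptions, not tuned values.
    """

    def __init__(self, min_cutoff: float = 1.0, beta: float = 0.01,
                 d_cutoff: float = 1.0) -> None:
        self.min_cutoff = min_cutoff  # cutoff (Hz) used when the pointer is still
        self.beta = beta              # how strongly speed raises the cutoff
        self.d_cutoff = d_cutoff      # cutoff (Hz) for smoothing the speed estimate
        self.t_prev: Optional[float] = None
        self.x_prev = 0.0
        self.dx_prev = 0.0

    def __call__(self, t: float, x: float) -> float:
        if self.t_prev is None:
            # First sample: nothing to smooth against yet.
            self.t_prev, self.x_prev = t, x
            return x
        dt = t - self.t_prev
        if dt <= 0.0:
            # Duplicate or out-of-order timestamp: keep the last output.
            return self.x_prev
        # Estimate the speed of the signal and smooth that estimate.
        dx = (x - self.x_prev) / dt
        a_d = smoothing_factor(self.d_cutoff, dt)
        dx_hat = a_d * dx + (1.0 - a_d) * self.dx_prev
        # Faster movement -> higher cutoff -> less smoothing and less lag.
        cutoff = self.min_cutoff + self.beta * abs(dx_hat)
        a = smoothing_factor(cutoff, dt)
        x_hat = a * x + (1.0 - a) * self.x_prev
        self.t_prev, self.x_prev, self.dx_prev = t, x_hat, dx_hat
        return x_hat
```

A 2D pointer would use one filter instance per axis, e.g. `fx, fy = OneEuroFilter(), OneEuroFilter()`, calling `fx(t, x)` and `fy(t, y)` for every sample. Adapting the cutoff to speed is what lets such a filter trade off jitter against lag without a single fixed compromise.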

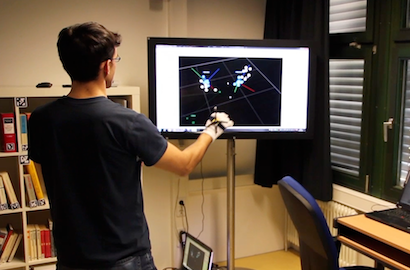

One major challenge for future human-computer interaction is the design of a cross-platform user interface paradigm that provides a consistent interaction and presentation style across different output devices, ranging from mobile devices to large high-resolution displays. We are currently introducing a novel concept based on a zoomable object-oriented information landscape (ZOIL) that meets these requirements. Moreover, interaction with such an environment should be similar across different input modalities, such as eye-gaze interaction, hand gestures, or gestures performed in combination with a mobile phone camera. A common interaction library named Squidy links these different devices and enables flexible design and evaluation.

This video shows how we use our software frameworks, the "ZOIL UI Framework" and the "Squidy Interaction Library", to design multimodal, multi-display, and multi-touch environments for work and knowledge work, as demonstrated in our Media Room at the University of Konstanz.

Squidy integrates different input modalities (ranging from multi-touch input to laser pointer, eye, or gesture tracking) into an input abstraction layer, on which the interaction designer or application developer can visually compose and iteratively improve ready-to-use signal processing filters and data pipes. Applications then access this Squidy layer to receive the desired low- or high-level multimodal input events. Squidy seamlessly integrates with existing operating systems and applications (e.g. Microsoft Windows 7) through standard protocols such as TUIO.
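The pipe-and-filter idea behind such an input abstraction layer can be sketched in a few lines of Python. This is not Squidy's actual API (Squidy composes its pipes visually); `PointerEvent`, `Pipeline`, and the three stage functions are hypothetical names chosen for illustration. Each stage receives an input event and returns either a (possibly modified) event or `None` to drop it, so stages can be reordered or swapped while the application only consumes the pipeline's output.

```python
from dataclasses import dataclass, replace
from typing import Callable, Dict, List, Optional, Sequence


@dataclass(frozen=True)
class PointerEvent:
    """Hypothetical device-independent input event."""
    source: str  # input modality, e.g. "laser", "touch", "gaze"
    x: float     # normalised screen coordinates in [0, 1]
    y: float
    t: float     # timestamp in seconds


# A stage maps an event to a new event, or to None to drop it.
Stage = Callable[[PointerEvent], Optional[PointerEvent]]


class Pipeline:
    """Chains stages; each stage sees the output of the previous one."""

    def __init__(self, stages: Sequence[Stage] = ()) -> None:
        self.stages: List[Stage] = list(stages)

    def then(self, stage: Stage) -> "Pipeline":
        # Return a new pipeline so existing pipelines stay unchanged.
        return Pipeline(self.stages + [stage])

    def process(self, event: PointerEvent) -> Optional[PointerEvent]:
        for stage in self.stages:
            result = stage(event)
            if result is None:
                return None  # a stage dropped the event
            event = result
        return event


def only_sources(*allowed: str) -> Stage:
    """Drop events from modalities the application is not interested in."""
    def stage(e: PointerEvent) -> Optional[PointerEvent]:
        return e if e.source in allowed else None
    return stage


def clamp_to_screen(e: PointerEvent) -> PointerEvent:
    """Keep coordinates inside the display, e.g. when a laser dot leaves it."""
    return replace(e, x=min(max(e.x, 0.0), 1.0), y=min(max(e.y, 0.0), 1.0))


def dead_zone(threshold: float) -> Stage:
    """Drop events that moved less than `threshold` on both axes since the
    last event of the same source that passed."""
    last: Dict[str, PointerEvent] = {}

    def stage(e: PointerEvent) -> Optional[PointerEvent]:
        prev = last.get(e.source)
        if (prev is not None and abs(e.x - prev.x) < threshold
                and abs(e.y - prev.y) < threshold):
            return None
        last[e.source] = e
        return e
    return stage
```

For example, `Pipeline().then(only_sources("laser", "touch")).then(clamp_to_screen).then(dead_zone(0.002))` would pass a laser event at x = 1.2 through as x = 1.0 and drop gaze events entirely. Keeping each stage a small independent function mirrors the idea of composing and iteratively improving filters without touching the application.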

The ZOIL UI Framework supports interaction designers in building feature-rich zoomable user interfaces as shared visual workplaces following the ZOIL (Zoomable Object-oriented Information Landscape) paradigm. The framework facilitates the construction of a large, visual, and zoomable workspace that serves as the application's user interface. This UI can be shared across display and device boundaries and presents all content and functionality in an object-oriented manner using semantic zooming. Each ZOIL client (e.g. a Microsoft Surface table, peripheral high-definition cube displays connected to a PC, a Tablet PC, a Pad, or a UMPC) can be used to navigate and manipulate the content and functionality within this information landscape independently, or it can be synchronized with other clients to serve as an overview or detail view. Building on Squidy's input layer, ZOIL applications can easily make use of novel input devices such as Anoto pens or the Nintendo Wiimote, or use Microsoft Surface SDK features even on non-Surface multi-touch tabletops.
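Semantic zooming means that an object changes its representation, not just its scale, as the user zooms in. The following Python sketch shows that decision under simplifying assumptions; it is not the ZOIL framework's API, and `DocumentItem`, `Viewport`, `representation`, and the pixel thresholds are hypothetical. Because each client keeps its own viewport onto the shared landscape, the same object can appear as an icon on an overview client and as full text on a detail client.

```python
from dataclasses import dataclass

# Hypothetical thresholds (on-screen width in pixels) at which the
# representation of an object switches.
ICON_MAX_PX = 80.0
SUMMARY_MAX_PX = 400.0


@dataclass
class DocumentItem:
    """Hypothetical object living in the shared information landscape."""
    title: str
    summary: str
    full_text: str
    width: float  # width in landscape (world) units


@dataclass
class Viewport:
    """One client's view onto the shared landscape."""
    center_x: float  # used for panning; not needed by representation()
    center_y: float
    zoom: float      # screen pixels per landscape unit


def representation(item: DocumentItem, view: Viewport) -> str:
    """Pick what to show for an item based on its size on this client's screen."""
    on_screen_px = item.width * view.zoom
    if on_screen_px < ICON_MAX_PX:
        return f"[icon] {item.title}"
    if on_screen_px < SUMMARY_MAX_PX:
        return f"{item.title}: {item.summary}"
    return f"{item.title}\n{item.full_text}"
```

An overview client with `Viewport(0, 0, zoom=0.5)` would show a 100-unit-wide item as an icon, while a detail client at `zoom=10` would show its full text. Basing the choice on on-screen size rather than on a fixed zoom level keeps the behaviour consistent across displays of different resolutions.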

Related Projects

- Blended Library: The goal of the Blended Library project is to develop new concepts that support the information-seeking process and collaboration in the physical library of the future. There, users can draw on familiar analog tools (e.g. pen and paper) as well as multimedia-enriched technologies (e.g. multi-touch tabletops) for knowledge work and intermediation.

- inteHRDis: Within the inteHRDis project, the Media Room is used to research novel input devices and interaction techniques, which can in turn support other Media Room scenarios. Combined with a unified interaction library (Squidy), the Media Room serves as a testing and design environment, especially for multimodal interaction techniques.

- Permaedia: The Permaedia project demonstrates a novel user interface paradigm for personal nomadic media. It is based on a zoomable object-oriented information landscape (ZOIL) and is meant to provide users with a consistent interaction style independent of device or display size. The Media Room provides the necessary environment to design this UI and to test and observe whether it succeeds in providing a device- and display-independent interaction concept.

- MedioVis: The MedioVis project aims to provide a "Knowledge Media Workbench" that assists information workers in line with their creative workflow. This requires supporting users in various situations and environments. The Media Room offers good opportunities to simulate public walls, tabletop terminals, work with mobile devices, and much more.

- Blended Interaction Design: The goal of this project is to provide tool support for the creative design process of novel interaction and interface concepts. Since this is often a highly collaborative activity, it requires an environment that supports working individually on user interface prototypes as well as sharing sketches, models, ideas, or code with others. The Media Room provides this environment with its different workstations, ranging from handheld computers to shared high-resolution displays.

- Holistic Workspace: The "Holistic Workspace" project addresses the following questions: "What will the control room workspace of the future look like? How can new technologies and supporting systems assist operators in their daily work?"

- Blended Museum: This project combines virtual and physical museums to enhance the visitor’s experience, focusing on innovative interaction techniques, information design, and mediation strategies. The Media Room allows us to develop and test these techniques in a controlled environment.

- eLmuse: Within the scope of this project, the different scenarios, interaction techniques, and devices are empirically evaluated with users. The focus is on longitudinal evaluation methods, which make it possible to better address learning aspects and also increase the ecological validity of the experiments.