Real-Time Optimization of XR User Interfaces

Duration

01.02.2022 - 30.06.2023; 01.07.2023 - 30.06.2027

Members

Tiare Feuchtner, Abdelrahman Zaky

Description

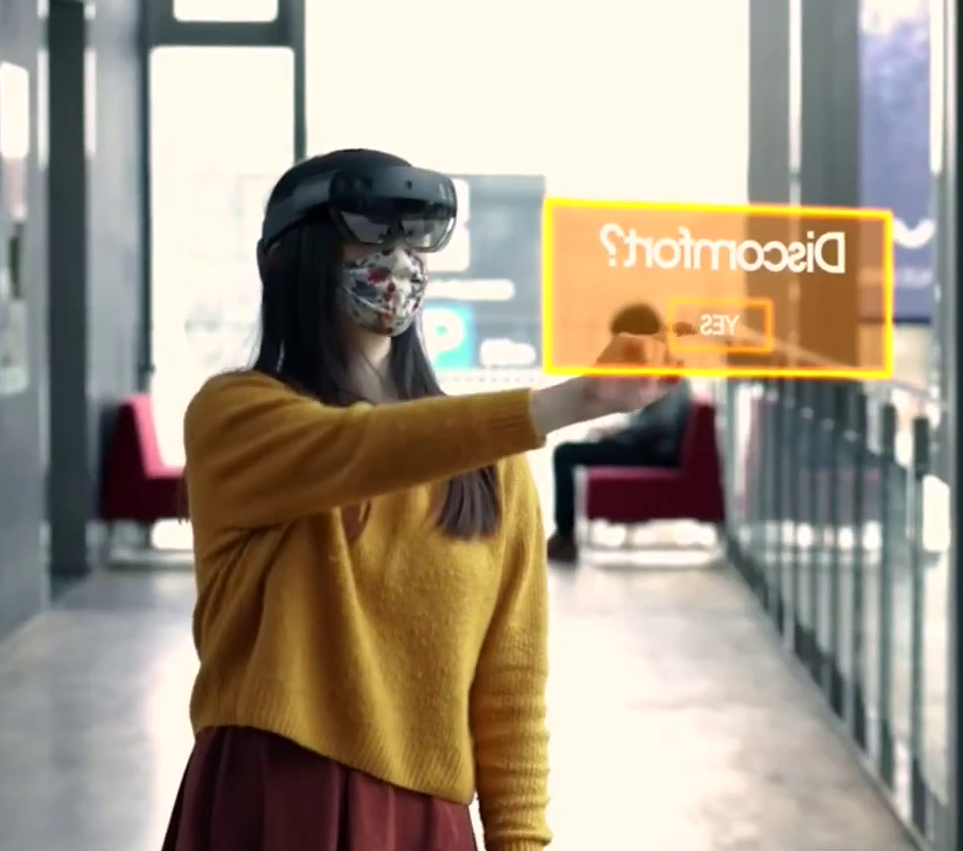

In this project, we aim to dynamically adapt the user interface of Cross-Reality (XR) applications on head-mounted displays (HMDs) during interaction, in order to improve usability and ensure users' safety and comfort.

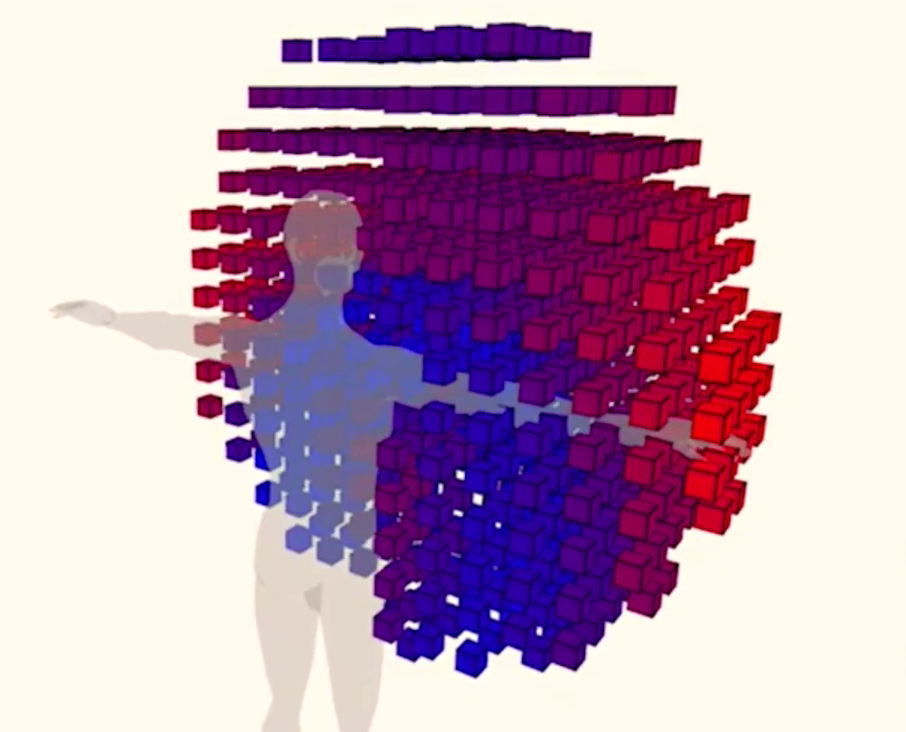

XR refers to any system that immerses the user in an interactive virtual or virtually augmented environment, such as Virtual Reality (VR) or Augmented Reality (AR). The virtual content is often placed in the space around the user's body and can be manipulated directly with the hands or with controllers. Moving virtual content from the 2D space of the computer screen into the 3D environment raises many new challenges for user interface (UI) and interaction design, because the context of interaction is complex and dynamic. Relevant aspects of this context include the user, the physical environment, and the activity. For example, an AR application that presents a virtual button in mid-air in front of the user may occlude the user's view of their conversation partner, cause them to knock over a glass of water on the table while reaching for the button, and induce muscle fatigue during prolonged interaction.

We aim to address these challenges by optimizing 3D UIs with regard to the placement of information and interactive elements, as well as the interaction techniques and feedback they employ, according to the current context of interaction.
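As an illustration only, one simple way to frame such context-dependent placement is to score candidate positions with a weighted sum of per-objective costs and pick the cheapest. The sketch below does this for the mid-air button from the example above, with one cost term for each concern it raises: occluding the conversation partner, reaching near the glass, and arm fatigue. Everything in it is a hypothetical assumption rather than the project's method: the names (`Context`, `occlusion_cost`, `best_placement`), the cost formulas, the thresholds (15° occlusion cone, 0.25 m safety radius, 0.5 m comfortable reach), and the weights.

```python
import math
from dataclasses import dataclass

# Hypothetical convention: 3D points in metres, y axis pointing up.
Vec3 = tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _angle(u: Vec3, v: Vec3) -> float:
    """Angle in radians between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.hypot(*u) * math.hypot(*v)
    # Clamp to [-1, 1] so rounding errors cannot break acos.
    return math.acos(max(-1.0, min(1.0, dot / norm)))


@dataclass
class Context:
    """Hypothetical snapshot of the interaction context."""
    head: Vec3             # user's eye position
    shoulder: Vec3         # shoulder of the interacting arm
    partner: Vec3          # conversation partner's head
    obstacles: list[Vec3]  # physical objects to avoid, e.g. a glass


def occlusion_cost(p: Vec3, ctx: Context, max_angle: float = math.radians(15)) -> float:
    # 1 when the element lies exactly on the line of sight to the partner,
    # falling linearly to 0 at max_angle away from it.
    a = _angle(_sub(p, ctx.head), _sub(ctx.partner, ctx.head))
    return max(0.0, 1.0 - a / max_angle)


def collision_cost(p: Vec3, ctx: Context, safe_radius: float = 0.25) -> float:
    # Grows as the element gets closer than safe_radius to any obstacle.
    return max((max(0.0, 1.0 - math.dist(p, o) / safe_radius) for o in ctx.obstacles),
               default=0.0)


def fatigue_cost(p: Vec3, ctx: Context, comfortable_reach: float = 0.5) -> float:
    # Penalise raising the hand above the shoulder and reaching too far.
    elevation = max(0.0, p[1] - ctx.shoulder[1])
    overreach = max(0.0, math.dist(p, ctx.shoulder) - comfortable_reach)
    return elevation + overreach


# Hypothetical fixed weights; choosing them is exactly the conflict-resolution problem.
WEIGHTS = {"occlusion": 1.0, "collision": 2.0, "fatigue": 0.5}


def total_cost(p: Vec3, ctx: Context, w: dict[str, float] = WEIGHTS) -> float:
    return (w["occlusion"] * occlusion_cost(p, ctx)
            + w["collision"] * collision_cost(p, ctx)
            + w["fatigue"] * fatigue_cost(p, ctx))


def best_placement(candidates: list[Vec3], ctx: Context) -> Vec3:
    # Exhaustive search over a small candidate set; cheap enough to rerun every frame.
    return min(candidates, key=lambda p: total_cost(p, ctx))


ctx = Context(head=(0.0, 1.6, 0.0), shoulder=(0.2, 1.4, 0.0),
              partner=(0.0, 1.6, 2.0), obstacles=[(0.2, 0.8, 0.4)])
candidates = [
    (0.0, 1.6, 0.5),    # straight ahead, between user and partner
    (0.2, 0.85, 0.4),   # right next to the glass
    (0.35, 1.2, 0.35),  # low and to the right
    (-0.3, 2.0, 0.4),   # high and to the left
]
print(best_placement(candidates, ctx))  # (0.35, 1.2, 0.35)
```

A weighted sum keeps the computation cheap enough for real-time use, but it hides the trade-off inside fixed weights. Making such trade-offs visible to the designer or user is one of the open questions listed below.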

Research Questions

- What UI characteristics can and should be adapted and how (e.g., placement, appearance, interaction technique, user representation, immersion, physicality)?

- What are meaningful characteristics of the interaction context and how can they be quantified to facilitate real-time computation of optimization functions?

- How can we handle conflicting optimization objectives and make their resolution apparent to the designer or user?

- How much customizability does the user need during interaction, ranging from pre-defined modes to a fully adaptive system that learns over time?

- How can we adapt the UI to effectively support collaboration and social interaction between multiple users?

- What are meaningful and measurable success criteria for validating optimization results in user studies?