inteHRDis

Interaction Techniques for High Resolution Displays

Duration

2006 - 2011

Members

König, Rädle, Reiterer, Bieg, Schmidt, Foehrenbach, Gerken, Jetter, Dierdorf, Jenabi, Nitsche, Weidele

Description

Project part 5 of "information at your fingertips - interactive visualization for gigapixel displays"

Flyers

- Project:

- Interaction Library:

- Interaction Techniques and Modalities:

- Application Domains:

- Evaluation Methods:

News

- Cloud Browsing @ YOU_ser 2.0

The research project and artistic installation »Cloud Browsing« by Bernd Lintermann, Torsten Belschner, Mahsa Jenabi and Werner A. König is on exhibit from 1 May until the end of the year at the ZKM | Center for Art and Media in Karlsruhe. It introduces a new way of searching for information in Wikipedia using related images instead of hypertext. Visitors can experience touch and reality-based bodily interaction, using an iPod Touch as an input device to control a 360° PanoramaScreen. Cloud Browsing is a cooperation project of the ZKM | Institute for Visual Media and the Human-Computer Interaction Group of the University of Konstanz. Video

- HCI Group on 3sat/ZDF: Television show features Media Room

Today's NEUES...die Computershow on 3sat/ZDF featured our HCI Group in two segments. They presented several design concepts from our Media Room in the context of novel, intuitive user interfaces and input modalities, such as multitouch or gestural input. Furthermore, our very own Werner König was interviewed during the show on topics such as the relevance of virtual worlds (e.g. PS3 "Home") and the current state of the art of multitouch interfaces. He also gave an outlook on the future of our field. The show will be repeated several times during the next week and can be watched on YouTube or downloaded from the 3sat page: Video-Podcast (the HCI feature starts at 7:30 min).

- Four contributions of the inteHRDis project at CHI 2009 in Boston

The members of the inteHRDis project presented two Work-in-Progress papers, "Squidy: A Zoomable Design Environment for Natural User Interfaces" [PDF] and "Adaptive Pointing - Implicit Gain Adaptation for Absolute Pointing Devices" [PDF], at CHI '09, the Conference on Human Factors in Computing Systems. Additionally, Joachim Bieg presented his PhD topic "Gaze-Augmented Manual Interaction" [PDF] at the Doctoral Consortium. Furthermore, the paper "Understanding and Designing Surface Computing with ZOIL and Squidy" [PDF] was presented at the workshop Multitouch and Surface Computing.

- Globorama @ Ideenpark 2008

The artistic/scientific installation »Globorama« (Title: Exploration of Living Space) was exhibited from 16.05. to 25.05.2008 at the Ideenpark 2008 in Stuttgart. With a diameter of more than 10 meters, it was the biggest installation at the new Stuttgart Trade Fair Centre. Surrounded by 360° satellite images of the earth, the 290,000 visitors could travel the entire globe with the inteHRDis Laserpointer and, at selected points, immerse themselves in panoramic photos of the respective location.

Press Clipping:

» Suedkurier - HCI Konstanz @ Ideenpark 2008 [PDF] (15.05.2008)

» do it.online - Mit dem Laserpointer um die Welt (21.04.2008)

- Laserpointer-Interaction in a nutshell

Short facts about our novel Laserpointer-Interaction [PDF]

- Talk @ fmx/08

05.05.2008: Invited talk "Next generation input devices for interactive visualization" at the fmx/08, Event Visual Computing, Stuttgart.

- Laserpointer-interaction meets arts

The inteHRDis Laserpointer-Interaction is part of the artistic/scientific installation »Globorama«, which opens the PanoramaFestival at the ZKM | Center for Art and Media Karlsruhe. Experience a novel way of interaction from 29.09. to 28.10.2007 at the ZKM_Media Theater in Karlsruhe.

Press Release:

» Laserpointer-Interaktion am Globorama [PDF]

Press Clipping:

» Heise.de (24.09.2007)

» Cluster Visual Computing (24.09.2007)

» Südkurier [PDF] (27.09.2007)

» ka-news.de (30.9.2007)

» NATIONAL GEOGRAPHIC (01.10.2007)

- Video Laserpointer-Interaction

Demo-Video available: Laserpointer-Interaction between Art and Science

Project Description

With recent advances in technology, high resolution displays (HRDs) are gaining more and more attention in research and industry. Considering current technological progress, resolutions of more than a billion pixels on large or even standard-sized displays seem possible within the next decade. In the future, we will probably be surrounded by HRDs in the form of standard displays, TVs, advertising walls, collaborative working environments, and interactive wallpapers.

HRDs are capable of visualizing large and complex data without sacrificing context or detail. They open new perspectives for collaborative work in multi-user, multi-device environments and offer great opportunities for pinboard-like spatial information management. With these new capabilities, users are confronted with a previously unknown amount and density of information on interactive displays. HRDs therefore introduce a number of interaction challenges that are not addressed by traditional input devices and conventional graphical user interface metaphors.

The research project "Interaction Techniques for High Resolution Displays" is funded by the Förderprogramm Informationstechnik Baden-Württemberg (BW-FIT) as project part 5 of "information at your fingertips - interactive visualization for gigapixel displays". Its purpose is to analyze existing interaction and visualization techniques for high resolution displays and to conceive new ones, taking human capabilities and limitations into account. In particular, Zoomable User Interface approaches with Semantic Zooming are investigated with regard to their applicability to HRDs within a consistent, user-oriented concept. The project also compares recently proposed input mechanisms based on tracking systems with unconventional input devices for HRDs such as PDAs, UMPCs (Ultra Mobile PCs) and game controllers. PDAs are considered very promising, since their built-in display allows complex and parallel user interaction with additional visual feedback and orientation aids.

Furthermore, the project includes the conception and implementation of a generic interaction framework for high resolution displays based on the preceding analysis. Besides integrating PDA-Interaction, 3D-Flysticks, Hand-Gestures, mobile Eye-Trackers and further flexible input devices into the interaction framework, we particularly focus on Laserpointer-Tracking, which allows very intuitive and direct interaction with large, high resolution displays without restricting the user's position or distance to the screen.

A key issue with regard to Semantic Zooming and evaluations is tracking the user's position, orientation and attention in single- or multi-user environments. First, fundamental questions regarding screen capturing, user tracking and interaction logging need to be answered. Upcoming empirical evaluations will focus on interaction and visualization techniques, diverse input devices and the designed framework itself.

For further information, comments or questions don't hesitate to contact us. Download a project flyer right here.

The PowerWall installed at the University of Konstanz provides an optimal research environment for this project. With a resolution of 4640 x 1920 pixels and dimensions of 5.20 x 2.15 meters, it is one of the most capable high resolution displays in Europe.
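As a rough illustration of what these figures imply, the following sketch derives the total pixel count and the approximate pixel pitch from the resolution and dimensions stated above. It assumes evenly distributed pixels across the full stated width and height; the function name `display_metrics` is purely illustrative.

```python
def display_metrics(width_px, height_px, width_m, height_m):
    """Derive simple density figures from a display's resolution and size.

    Assumption: pixels are spread evenly over the stated physical dimensions.
    """
    total_pixels = width_px * height_px
    # Pixel pitch: physical distance between neighbouring pixel centres, in mm.
    pitch_h_mm = width_m * 1000 / width_px
    pitch_v_mm = height_m * 1000 / height_px
    # Pixels per inch along the horizontal axis (1 inch = 0.0254 m).
    ppi = width_px / (width_m / 0.0254)
    return {
        "total_pixels": total_pixels,
        "pitch_h_mm": pitch_h_mm,
        "pitch_v_mm": pitch_v_mm,
        "ppi": ppi,
    }


# Figures for the PowerWall as given in the text.
powerwall = display_metrics(4640, 1920, 5.20, 2.15)
```

Under these assumptions the PowerWall comes out at about 8.9 million pixels with a pixel pitch of roughly 1.1 mm (about 23 pixels per inch), which is coarse at arm's length but supports reading detail from a few steps away.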

inteHRDis Laserpointer-Interaction applied to the ZKM PanoramaScreen at the Center for Art and Media in Karlsruhe. The 360° PanoramaScreen has a diameter of 8 meters and a resolution of 8192 x 928 pixels, and is driven by 6 SXGA+ projectors.

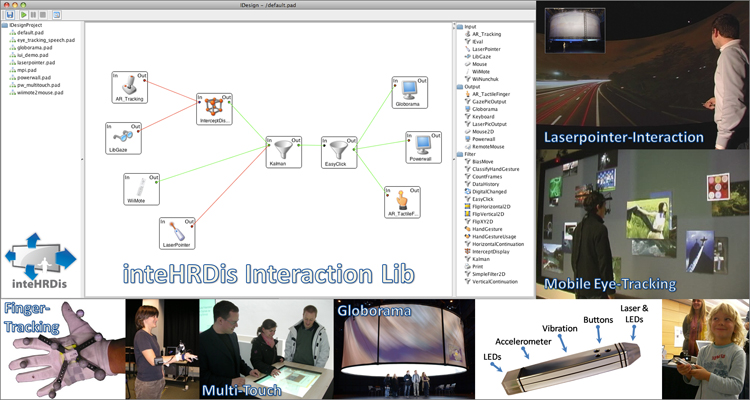

Upper-left: Visual user interface of the Squidy Interaction Library developed in the project inteHRDis. Around: Diverse input devices and application domains realized with Squidy. Download Squidy Short Paper / Flyer / Poster

Overview of diverse input devices and interaction techniques developed at the Human-Computer Interaction Group, e.g. Multi-Touch Interaction, Finger & Gesture Tracking, Eye Gaze Interaction, Laserpointer Interaction...

The video shows our Interaction Library Squidy, which eases the design of natural user interfaces by unifying relevant frameworks and toolkits in a common library. Squidy provides a central design environment based on high-level visual data flow programming combined with zoomable user interface concepts. The user interface offers a simple visual language and a collection of ready-to-use devices, filters and interaction techniques. The concept of semantic zooming nevertheless enables access to more advanced functionality on demand. Thus, users are able to adjust the complexity of the user interface to their current needs and knowledge. See the Squidy project website for more details.
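To make the data flow idea more concrete, the following is a minimal sketch of a pipeline in which samples from an input device pass through a chain of filters before reaching an application. It is not Squidy's actual API: all names (`Position`, `smoothing_filter`, `scale_to_display`, `run_pipeline`) are hypothetical, and the moving-average filter is only one plausible example of a filter node.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, List


@dataclass(frozen=True)
class Position:
    """A 2D input sample; here in normalized coordinates (0.0 to 1.0)."""
    x: float
    y: float


# A node consumes a stream of positions and produces a new stream.
Node = Callable[[Iterator[Position]], Iterator[Position]]


def smoothing_filter(window: int) -> Node:
    """Hypothetical filter node: moving average over the last `window` samples."""
    def node(stream: Iterator[Position]) -> Iterator[Position]:
        recent: List[Position] = []
        for p in stream:
            recent.append(p)
            if len(recent) > window:
                recent.pop(0)
            n = len(recent)
            yield Position(sum(q.x for q in recent) / n,
                           sum(q.y for q in recent) / n)
    return node


def scale_to_display(width_px: int, height_px: int) -> Node:
    """Hypothetical filter node: map normalized coordinates to display pixels."""
    def node(stream: Iterator[Position]) -> Iterator[Position]:
        for p in stream:
            yield Position(p.x * width_px, p.y * height_px)
    return node


def run_pipeline(source: Iterable[Position], nodes: List[Node]) -> List[Position]:
    """Connect the nodes in order and collect what arrives at the end."""
    stream = iter(source)
    for node in nodes:
        stream = node(stream)
    return list(stream)


# Simulated device samples, smoothed and then mapped to a 4640 x 1920 display.
samples = [Position(0.0, 0.0), Position(0.5, 0.5), Position(1.0, 1.0)]
result = run_pipeline(samples, [smoothing_filter(2), scale_to_display(4640, 1920)])
```

Because every node shares the same stream-in, stream-out shape, filters can be reordered, removed or exchanged without touching the device or the application, which is the property a visual data flow editor builds on.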

Publication list