Squidy

Duration

2007 - 2012

Members

Rädle, König, Reiterer, Schmidt, Nitsche, Klinkhammer, Bieg, Foehrenbach, Weidele, Dierdorf

Description

Squidy Interaction Library

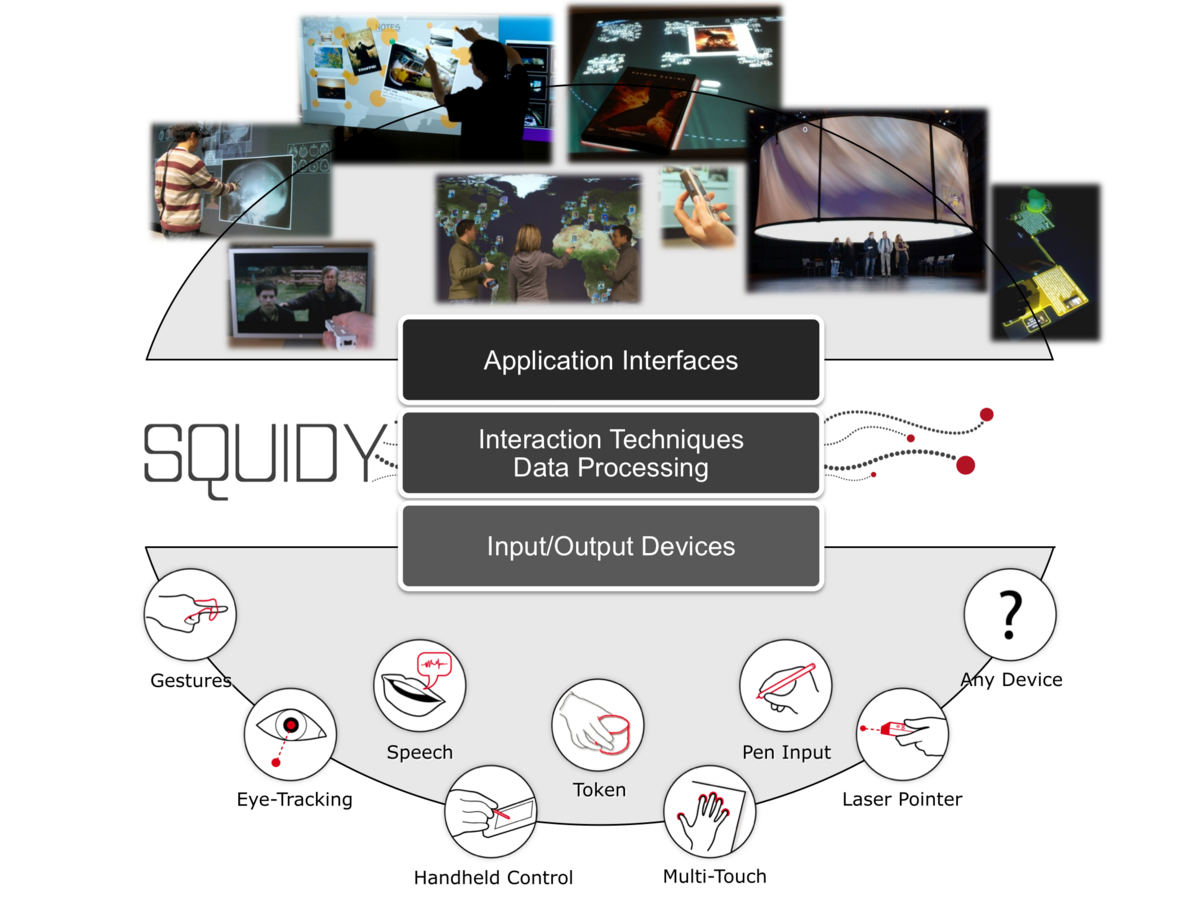

Squidy is an interaction library that eases the design of natural user interfaces (also known as "post-WIMP interfaces") by unifying various device drivers, frameworks and tracking toolkits in a common library and providing a central, easy-to-use visual design environment. Squidy offers diverse input modalities such as multi-touch input, pen interaction, speech recognition, and laser pointer, eye and gesture tracking. The visual user interface hides the complexity of the technical implementation from the user by providing a simple visual language based on high-level visual dataflow programming combined with zoomable user interface concepts. Furthermore, Squidy offers a collection of ready-to-use devices, signal processing filters and interaction techniques. The trade-off between functionality and simplicity of the user interface is addressed by semantic zooming, which enables dynamic access to more advanced functionality on demand. Thus, developers as well as interaction designers are able to adjust the complexity of the Squidy user interface to their current needs and knowledge. Squidy was developed in the course of the research project inteHRDis - Interaction Techniques for High Resolution Displays.

The Squidy Interaction Library is free software published under the LGPL: http://squidy-lib.de

News

- Squidy has been published in the Journal on Multimodal User Interfaces (Springer): Interactive Design of Multimodal User Interfaces - Reducing technical and visual complexity, Feb 2010.

- "Zoom! Squidy Brings Together Natural Interaction, Puts Standard TUIO on MS Surface": online article about the Squidy Interaction Library by Peter Kirn

- The Squidy Interaction Library is available as open source on GitHub (http://squidy-lib.de). A Squidy "SurfaceToTuio" component is available at https://sourceforge.net/projects/squidy-lib/

- "Adaptive Pointing – Design and Evaluation of a Precision Enhancing Technique for Absolute Pointing Devices": conference talk about the Squidy filter Adaptive Pointing at INTERACT'09, the twelfth IFIP conference on Human-Computer Interaction, Sweden

- "Designing innovative Interaction modalities with Squidy": talk about Squidy at the Eighth Heidelberg Innovation Forum 2009

- HCI-Group applies for a European patent: Adaptive Pointing - Implicit Gain Adaptation For Absolute Pointing Devices

Open Source

The Squidy Interaction Library is now available to the public. Here is the link:

Squidy on GitHub: http://squidy-lib.de

Squidy - User Interface Design

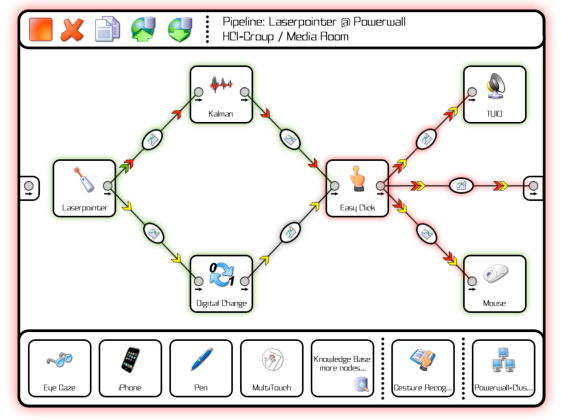

The basic concept which enables the visual definition of the dataflow between the input and output is based on a pipe-and-filter concept. This offers a very simple but powerful visual language to design the interaction logic. The user thereby selects the input device or hardware prototype of choice as “source”, connects it successively with filter nodes for data processing such as compensation of hand tremor or gesture recognition and routes the refined data to the “sink”. This can be an output device such as a vibrating motor for tactile stimulation or LEDs for visual feedback. Squidy also provides a mouse emulator as output node to offer the possibility to control standard WIMP-applications with unconventional input devices. Multipoint applications (e.g. for multi-touch surfaces or multi-user environments) and remote control are supported by an output node which transmits the interaction data as TUIO messages over the network. The internal dataflow between the nodes in Squidy consists of a stream of single or multiple grouped data objects of well-defined data types based on the primitive virtual devices introduced by Wallace [The semantics of graphic input devices, SIGGRAPH’76]. In contrast to the low-level approaches used in related work, such abstracting and routing of higher-level objects has the advantage that not every single variable has to be routed and completely understood by the user. The nodes transmit, change, delete data objects, or generate additional ones (e.g. if a gesture was recognized).
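The pipe-and-filter idea can be illustrated with a minimal sketch. It is not Squidy's actual API: the data types `Position2D` and `Gesture`, the three filter functions and `run_pipeline` are hypothetical names chosen for this example. It only shows how typed data objects might flow from a source through filter nodes that change, delete or generate objects before reaching a sink.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Position2D:
    """Hypothetical data object: a 2D position in normalized coordinates."""
    x: float
    y: float


@dataclass(frozen=True)
class Gesture:
    """Hypothetical data object generated when a gesture is recognized."""
    name: str


# Each filter maps one incoming data object to a list of zero or more
# outgoing objects, so it can change, delete or generate data objects.

def drop_origin(obj):
    """Delete: discard positions exactly at the origin (assumed dropouts)."""
    if isinstance(obj, Position2D) and obj.x == 0.0 and obj.y == 0.0:
        return []
    return [obj]


def clamp_to_unit_square(obj):
    """Change: clamp positions to [0, 1]; pass other objects through."""
    if isinstance(obj, Position2D):
        return [Position2D(min(max(obj.x, 0.0), 1.0),
                           min(max(obj.y, 0.0), 1.0))]
    return [obj]


def detect_right_edge(obj):
    """Generate: add a Gesture object when the right edge is reached."""
    if isinstance(obj, Position2D) and obj.x >= 1.0:
        return [obj, Gesture("right-edge")]
    return [obj]


def run_pipeline(source, filters, sink):
    """Route every object from the source through all filters to the sink."""
    for obj in source:
        batch = [obj]
        for node in filters:
            batch = [out for item in batch for out in node(item)]
        for out in batch:
            sink(out)


received = []
run_pipeline(
    source=[Position2D(0.0, 0.0), Position2D(0.4, 0.5), Position2D(1.3, 0.2)],
    filters=[drop_origin, clamp_to_unit_square, detect_right_edge],
    sink=received.append,
)
```

Because the filters exchange whole data objects rather than individual variables, a node can be inserted or removed without touching its neighbours.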

Semantic Zooming - Details on Demand, Interactive Configuration & On-the-fly Compilation

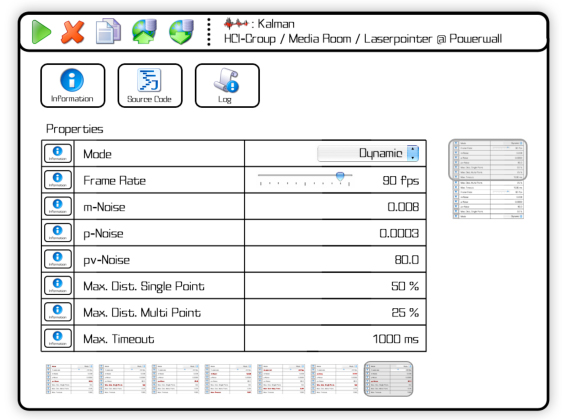

When zooming into a node, additional information and corresponding functionality appear, depending on the screen real estate available (semantic zooming). Thus, the user is able to gradually define the level of detail (complexity) according to the current need for information. In contrast to related work, the user does not have to leave the visual interface and switch to an additional programming environment in order to generate, change or just access the source code of device drivers and filters. In Squidy, zooming into a node reveals all parameters and enables the user to interactively adjust their values at run-time. This is highly beneficial for empirically finding suitable parameters for the current environment setting (e.g. the noise levels of a Kalman filter). Furthermore, the user can zoom into the information view, which provides illustrated information about the node functionality itself and its parameters. The user may even access the source code of the node by semantic zooming, so code changes can be made within the visual user interface. When the user zooms out, the code is compiled and integrated on the fly. This instant integration enables a very agile and interactive design and development process.
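To illustrate why run-time adjustable noise levels matter, here is a minimal sketch of a scalar Kalman filter whose parameters can be changed between updates. It is a textbook one-dimensional filter with a constant-position model, not Squidy's own filter node; the class name and the attributes `process_noise` and `measurement_noise` are assumptions made for this example.

```python
class ScalarKalmanFilter:
    """Textbook 1D Kalman filter (constant-position model).

    Both noise levels are plain attributes, so they can be changed
    while the filter is running, much like moving a slider.
    """

    def __init__(self, process_noise=1e-3, measurement_noise=1e-1):
        self.process_noise = process_noise          # assumed parameter name
        self.measurement_noise = measurement_noise  # assumed parameter name
        self.estimate = None
        self.variance = 1.0

    def update(self, measurement):
        # The first sample initializes the state directly.
        if self.estimate is None:
            self.estimate = measurement
            return self.estimate
        # Predict: uncertainty grows by the process noise.
        self.variance += self.process_noise
        # Correct: blend prediction and measurement by the Kalman gain.
        gain = self.variance / (self.variance + self.measurement_noise)
        self.estimate += gain * (measurement - self.estimate)
        self.variance *= 1.0 - gain
        return self.estimate


responsive = ScalarKalmanFilter(measurement_noise=0.1)
smooth = ScalarKalmanFilter(measurement_noise=0.1)
responsive.update(0.0)
smooth.update(0.0)

# Raising the measurement noise at run-time makes the filter trust new
# samples less, so it follows a sudden jump more slowly.
smooth.measurement_noise = 10.0
fast_estimate = responsive.update(1.0)
slow_estimate = smooth.update(1.0)
```

A larger measurement noise yields a steadier but laggier cursor, which is the kind of trade-off that is easiest to tune empirically while the pipeline is running.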

View of a simple pipeline in Squidy. The pipeline receives position, button, and inertial data from a laser pointer, applies a Kalman filter, a filter for change recognition and a filter for selection improvement, and finally emulates a standard mouse to interact with conventional applications. At the same time, the data is sent via TUIO to listening applications. The pipeline-specific functions and breadcrumb navigation are positioned at the top. The zoomable knowledge base with a selection of recommended input devices, filters, and output devices is located at the bottom.

The revealed parameters of a zoomed node. This view allows direct manipulation of the node parameters and thus an interactive adjustment of values at run-time.

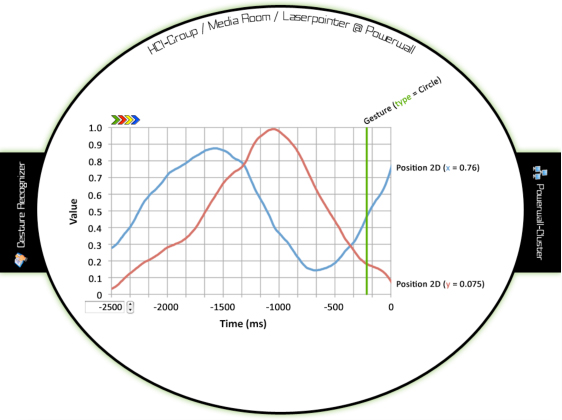

Dataflow visualization allows fast access to the current dataflow between two nodes. Users benefit from the insight into the interaction dataflow.

Squidy - Key Features

- Multi-threading: Support for multiple incoming and outgoing connections provides high flexibility and the potential for massively parallel execution of concurrent nodes. Each node runs in its own thread and processes its data independently as soon as it arrives. This effectively reduces the processing delay that could have a negative effect on the interaction performance.

- Reusability & comparability: Nodes are completely independent components, offer high reuse, are free from side effects, and can be activated separately e.g. for comparative evaluations.

- Less demanding: Semantic zooming enables users to adjust the complexity of the user interface to their current need. Moreover, users may use filters and devices as “black boxes” without any knowledge of the technical details and thus concentrate on the design.

- Dataflow visualization: The visual inspection of the current dataflow helps to identify possible issues and facilitates fast error correction at runtime.

- Interactive configuration: Changes in the dataflow and configuration of node parameters are instantly applied and thereby can change the interaction. This supports fast and interactive design iterations.

- Visual interaction design: The pipe-and-filter concept augmented with semantic zooming offers a very simple, but powerful visual language for the design and development of natural user interfaces.
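The multi-threading feature listed above can be sketched as follows. This is a simplified illustration and not Squidy's implementation: the `Node` class, its `inbox` queue and the `_STOP` sentinel are hypothetical, and the shutdown logic only handles a node with a single incoming connection.

```python
import queue
import threading

_STOP = object()  # hypothetical sentinel that shuts the pipeline down


class Node(threading.Thread):
    """A node that processes incoming data objects in its own thread."""

    def __init__(self, process):
        super().__init__(daemon=True)
        self.process = process
        self.inbox = queue.Queue()
        self.outputs = []

    def connect(self, other):
        """Add an outgoing connection to another node."""
        self.outputs.append(other)

    def run(self):
        while True:
            item = self.inbox.get()  # blocks until data arrives
            if item is _STOP:
                for node in self.outputs:
                    node.inbox.put(_STOP)
                break
            result = self.process(item)
            if result is not None:
                for node in self.outputs:
                    node.inbox.put(result)


mouse_events, tuio_events = [], []
rounding = Node(lambda value: round(value, 1))
mouse = Node(mouse_events.append)  # sink: returns None, forwards nothing
tuio = Node(tuio_events.append)    # second sink fed by the same filter
rounding.connect(mouse)
rounding.connect(tuio)

nodes = (rounding, mouse, tuio)
for node in nodes:
    node.start()
for sample in (0.12, 0.48, 0.91):
    rounding.inbox.put(sample)
rounding.inbox.put(_STOP)
for node in nodes:
    node.join()
```

Each node only blocks on its own queue, so a slow sink does not delay the filter or the other sink.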

For further information, comments or questions, don't hesitate to contact us. Find some more details in the overview paper published at CHI'09 (WiP) or in the project flyer.

Interaction Modalities

Gaze Augmented Manual Interaction - Progress in the design of affordable, robust, and comfortable eye-tracking systems promises to bring eye-tracking out of the research laboratory and into new applications in HCI: from built-in eye-trackers in laptop screens to wearable systems that locate the user's point of regard on large, wall-sized displays of tomorrow's workplaces. In preparation for this, new interaction techniques will be developed in this research. Paper, Flyer

Touch Interaction - Devices operated by direct multitouch input offer great opportunities for novel interaction techniques and user interfaces. Our research efforts aim at developing concepts that exploit these opportunities and provide users with natural and reality-based environments. For designing and implementing our concepts, we use several multitouch tabletops and diverse software libraries for finger and object tracking. Furthermore, we have developed software for transferring applications across tabletops, e.g. our Surface2Tuio library allows the usage of any TUIO application on a Microsoft Surface device (see www.tuio.org). Paper (Surface Computing), Paper (Text Input), Flyer
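To give an impression of what TUIO data looks like on the wire, the following sketch encodes a single cursor `set` message of the TUIO 1.1 `/tuio/2Dcur` profile as an OSC message. It is not taken from Surface2Tuio or Squidy; the function names are chosen for this example. A complete TUIO frame would additionally bundle `alive` and `fseq` messages, which are omitted here.

```python
import struct


def osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def tuio_2dcur_set(session_id, x, y, vx=0.0, vy=0.0, accel=0.0):
    """Build '/tuio/2Dcur set s x y X Y m' as raw OSC bytes.

    session_id is an int32; position (x, y), velocity (vx, vy) and
    motion acceleration are float32 values in big-endian byte order.
    """
    return (
        osc_string("/tuio/2Dcur")   # OSC address pattern
        + osc_string(",sifffff")    # type tags: string, int32, 5 x float32
        + osc_string("set")
        + struct.pack(">i5f", session_id, x, y, vx, vy, accel)
    )


message = tuio_2dcur_set(session_id=7, x=0.25, y=0.5)
```

The resulting bytes could be sent in a UDP datagram to a listening TUIO client; TUIO's default port is 3333.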

Multimodal Interaction with Mobile Phones - Conventional input devices such as mouse and keyboard restrict users' mobility by requiring a stable surface on which to operate and thus impede fluid interaction. Mobile phones are technology-rich devices that people carry almost everywhere almost all the time. From storing personal data to playing games and surfing the web, mobile phones help their users to quickly get what they need. The rich capabilities of mobile phones with their smart integrated sensors make them promising input devices in ubiquitous computing environments. Paper, Flyer, Video

Adaptive Pointing - Adaptive Pointing is a novel approach to addressing the common problem of accuracy when using absolute pointing devices for distant interaction. The intention behind this approach is to improve pointing performance for absolute input devices by implicitly adapting the Control-Display gain to the current user's needs without violating users' mental model of absolute-device operation. Paper, Flyer
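The general idea of a velocity-dependent Control-Display gain can be sketched as below. This is explicitly not the published Adaptive Pointing algorithm, which is described in the paper; the gain curve, its thresholds and the function names are assumptions made purely for illustration, and the sketch ignores how an absolute mapping is restored after slow movements.

```python
def cd_gain(speed, slow=0.01, fast=0.10, min_gain=0.2):
    """Illustrative gain curve (all thresholds are assumed values).

    Below `slow` the gain is `min_gain` (more precision), above `fast`
    it is 1.0 (plain absolute mapping), with a linear ramp in between.
    """
    if speed <= slow:
        return min_gain
    if speed >= fast:
        return 1.0
    t = (speed - slow) / (fast - slow)
    return min_gain + t * (1.0 - min_gain)


def move_cursor(cursor, previous_device, device):
    """Move a 1D cursor by the device displacement scaled by the gain."""
    delta = device - previous_device
    return cursor + cd_gain(abs(delta)) * delta


# Small, slow movements (e.g. hand tremor) are damped ...
precise = move_cursor(0.5, 0.5, 0.505)
# ... while fast movements are passed through unchanged.
coarse = move_cursor(0.5, 0.5, 0.8)
```

With these assumed values, a slow device displacement moves the cursor by only a fifth of that distance, whereas a fast one is applied in full.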

Hand Gesture Interaction - Hands are our main tools to manipulate objects. Gesticulation can complement or even substitute language. If hands are so valuable to us, why not use them in a more direct manner for human-computer interaction than they already are? Inspired by Adam Kendon's findings on gesticulation in everyday use, we identified suitable gestures which can be used for interaction with large high-resolution displays. Paper, Flyer, Thesis

Token Interaction - A token is a physical component, equipped with digital properties through information and communication technology. By using a physical object as an interaction device, users can benefit greatly from their pre-existing knowledge of the everyday, non-digital world. This results in a very intuitive and natural way of human-computer interaction. Flyer

Digital Pen & Paper - Creative professionals and designers often work with paper to sketch early and incomplete ideas, as it has unique handling properties that support the creative flow. Digital pen and paper technology makes it possible to capture handwriting and sketches on real paper for integration into software solutions. Using this technology, creative professionals do not have to change their existing workflows, but may additionally augment their physical work with computational tools. Interactive pens may also be used as multimodal input devices on projected displays and tabletops.

Application Domains

Project MedioVis - The MedioVis 2.0 project aims to provide a natural interface for seeking and exploring multimedia libraries. Knowledge work is a demanding activity, on the one hand because of the increasing complexity of today’s information spaces. On the other hand, knowledge workers follow an individual creative workflow, which involves multifaceted characteristics like diverse activities, locations, environments and social contexts. MedioVis 2.0 tries to support the entire workflow in one coalescing Knowledge Media Workbench, showcased in the context of digital libraries. Link, Flyer

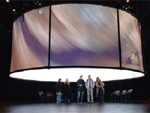

CloudBrowsing - Research project and interactive installation by Bernd Lintermann, Torsten Belschner, Mahsa Jenabi and Werner A. König, exhibited from 1 May 2009 until the end of the year at the ZKM | Center for Art and Media in Karlsruhe. It introduces a novel way of browsing and searching information in Wikipedia with related images instead of hypertext. Visitors enjoy touch and bodily interaction using an iPod Touch as the input device for controlling a 360° PanoramaScreen. CloudBrowsing is a cooperation project of the ZKM | Institute for Visual Media and the Human-Computer Interaction Group of the University of Konstanz. Poster

Media Room - The Media Room provides a research environment for the design, development, and evaluation of novel interaction techniques and input devices as well as a simulation facility for future collaborative work environments. To this end, it offers different input devices (e.g. laser pointer, hand gestures, multitouch, eye gaze, speech) and output devices (Table-Top, HD-Cubes, audio & tactile feedback) which can be used simultaneously and in combination, creating a new dimension of multimodal interaction. Link, Flyer

Project permaedia - The permaedia project explores novel user interfaces for novel applications of personal digital media for the coming decade. Our research focuses on addressing user needs in a rapidly changing world in which mobility and urbanity become the key influences on digital media use. permaedia will demonstrate how our future personal and working life can be enriched by novel nomadic applications and user interface concepts which provide unified, pervasive access to personal and public information spaces with all kinds of mobile and stationary devices in a consistent, easy-to-use, easy-to-learn and "intuitive" manner. Link, Flyer

Globorama - Interactive installation with a 360° panoramic screen, 8-channel sound and laser pointer interaction. Using innovative representational forms of satellite images, Globorama investigates a new navigable information space: surrounded by 360° satellite images of the earth, visitors travel the entire globe with a laser pointer and at selected points submerge in panoramic photos of the respective location. In cooperation with Bernd Lintermann, Joachim Böttger & Torsten Belschner. Exhibited from 09/29 to 10/28/2007 at the ZKM | Center for Art and Media in Karlsruhe as the first installation of the ZKM PanoramaFestival and from 05/16 to 05/25/2008 at the ThyssenKrupp Ideenpark 2008 in Stuttgart (~290,000 visitors). Globorama is a cooperation project of the ZKM | Institute for Visual Media and the University of Konstanz. Paper, Poster

Project Blended Interaction Design - The project Blended Interaction Design investigates novel methods and techniques along with tool support that result from a conceptual blend of human-computer interaction with design practice. Using blending theory with material anchors as a theoretical framework, we frame both input spaces and explore emerging structures within technical, cognitive, and social aspects. Based on our results, we will describe a framework of the emerging structures and will design and evaluate tool support within a spatial, studio-like workspace to support collaborative creativity in interaction design. Link, Flyer

Videos

This video shows a small demo of the Squidy Interaction Library with the focus on its user interface concept.

This video shows how our workgroup's software frameworks, the ZOIL UI framework and the Squidy Interaction Library, can be used for designing multi-modal, multi-display and multi-touch work environments such as our Media Room at the University of Konstanz.

Publication list